Thought(s) on changing S@h limit of: 100tasks/mobo ...to a: ##/CPUcore


| Author | Message |

|---|---|

Stubbles Joined: 29 Nov 99 Posts: 358 Credit: 5,909,255 RAC: 0

|

Hey gurus, Since the thread "Panic Mode On (103) Server Problems?" has gone off on a few drastic tangents, I am reposting a slightly modified version of my lost tangent post, which has gone unanswered due to (I'm assuming) the high volume of posts in that thread. One of Al's posts gave me an idea that could be included in: 1. a future major release of the Boinc Client, or 2. a S@h server-side code modification. I remember reading a post (which I can't find) by someone from the staff (Dr Korpela?) about NOT being able to program Boinc to recognize multiple CPUs ...but with a reference to BOINC only being able to see the # of CPU cores. Maybe it would be worth looking into that a bit further?!? Here's my thinking: why not put a new deviceTaskLimit per CPU core (instead of the current per-motherboard limit)? Obviously 100 tasks/core would be WAY too high ...but since we have the daily updates of S@h CPUs, we might be able to come up with a scenario that is better than the current 100 tasks/mobo limit. It could easily help satisfy multi-CPU rigs' crazy hunger (like Al's LotzaCores) without having any impact on slow rigs that are currently limited by the 10-day limit. We'd just have to determine a good max/CPUcore that wouldn't have a negative impact on: 1. servers; and 2. mid-range rigs with 4-8 cores, but would greatly benefit high-end rigs that have 8-24 cores. As a quick initial calculation: for different CPU categories (with and w/o GPU) we could start identifying the impact of 25/CPU core (since for a quad core, that's 100/4), and compare that to the existing limit of 100 tasks/mobo. My guess is that 25/core is way too high to minimize server impact. Any thoughts, S@h gurus? |
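The "quick initial calculation" in the post above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name `per_core_cap` is made up for this example, and 25 per core is the poster's starting guess, not a value the project uses.

```python
FLAT_LIMIT = 100     # current limit quoted in the thread: 100 CPU tasks per host
PER_CORE_LIMIT = 25  # candidate value from the post (100 / 4 for a quad core)


def per_core_cap(threads: int, per_core: int = PER_CORE_LIMIT) -> int:
    """Hypothetical cap that grows linearly with the reported thread count."""
    if threads < 1:
        raise ValueError("a host must report at least one thread")
    return threads * per_core


if __name__ == "__main__":
    # Compare the flat limit with the per-core proposal for a few host sizes.
    for threads in (2, 4, 8, 24, 56):
        cap = per_core_cap(threads)
        print(f"{threads:>2} threads: flat={FLAT_LIMIT}  per-core={cap}  "
              f"ratio={cap / FLAT_LIMIT:.2f}x")
```

Note that a purely linear rule would give a 2-thread host fewer tasks than it gets today, so any real scheme would presumably keep 100 as a floor.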

Richard Haselgrove Joined: 4 Jul 99 Posts: 14690 Credit: 200,643,578 RAC: 874

|

Let's split that into two parts: what BOINC can do, and what SETI wants to do with BOINC. First BOINC. What can BOINC do to distinguish these two cases?   Two different machines - one a single i7 with four cores hyperthreaded, and the other dual Xeon CPUs, each with four cores and no hyperthreading. Come to that, how would you distinguish the single CPU from the dual CPU, without using clues like my host list and the different memory sizes? If we can't do it, how would BOINC detect the difference (under all supported operating systems)? All that BOINC currently records - and I think all that is really important - is the number of threads available via the operating system. 8 in each case. Secondly, what does SETI want? (the 100 tasks per motherboard is their choice) Their stated aim is to allow - indeed, encourage - as many individuals as possible to join in the fun - and possible excitement - of the scientific search for Extra-Terrestrial Intelligence using distributed computing. And out of that has grown the facility - BOINC - for distributing other scientific endeavours via distributed computing, using free software and minimalist server hardware. Although as the first, the biggest, and the best, SETI has moved on beyond the tiny servers of 17 years ago, it's still very much a shoe-string operation, running on fumes and the occasional $10 donation from volunteers here. I exaggerate for comic effect, but not by much. So, SETI wants to service as many users as possible, using as few servers as possible. That means rationing. We tried it without limits a year or two back, and when a 'stop splitting' watchdog daemon failed, the database nearly blew up. IIRC, it took over a week to get the project back on an even keel. Now, we're running GBT data under the auspices of Breakthrough Listen, and GBT data runs better on CPUs, and Breakthrough Listen has pots of money. 
So, it would be entirely possible to start from a perceived need for more processing capacity to keep pace with GBT recording, proceed via server upgrading (hardware and software), and end up by increasing the 'per motherboard' task limits. In that order. In the meantime, anybody who purchases a motherboard populated with enough CPUs to process over 100 tasks during the maintenance window every Tuesday does so with the foreknowledge that it will have a short period of idleness every week. What they do with that knowledge is their own business. What I've advocated in the past is that everybody who spends their own, personal, disposable money on building hardware just for SETI use, and nothing else, should voluntarily add a small percentage donation to their budget, towards the server and infrastructure components of their hobby. 1%, 5%, 10% - pick your own number. And completely unnecessary if you were buying the computer anyway, for general business or home use. It's just the dedicated crunch-heads (like me, too) who I'm suggesting divvy up the funds into both server and client shares, rather than putting 100% into their own hardware and assuming the servers work for free. |
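To make the "8 in each case" point concrete, here is a minimal Python sketch. It does not show how BOINC itself counts processors (the thread does not say); it only uses Python's standard `os.cpu_count()`, which returns the number of logical CPUs the operating system exposes. The helper `logical_threads` is a made-up name for this illustration.

```python
import os


def logical_threads(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical CPU count that a given physical layout presents to the OS."""
    return sockets * cores_per_socket * threads_per_core


def reported_thread_count() -> int:
    """Logical CPUs visible on this machine (os.cpu_count() may return None)."""
    return os.cpu_count() or 1


if __name__ == "__main__":
    single_i7 = logical_threads(sockets=1, cores_per_socket=4, threads_per_core=2)
    dual_xeon = logical_threads(sockets=2, cores_per_socket=4, threads_per_core=1)
    # Both layouts collapse to the same single number.
    print("single i7 with HT:", single_i7)
    print("dual Xeon, no HT: ", dual_xeon)
    print("this machine:     ", reported_thread_count())
```

A single thread count carries no socket information, which is why the two machines described above look identical from the scheduler's side.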

Shaggie76 Joined: 9 Oct 09 Posts: 282 Credit: 271,858,118 RAC: 196

|

Not to undermine your argument, but I just wanted to say it's pretty trivial to do this in Win32 -- even detecting HT isn't hard -- and I'm sure Linux is the same. |

Stubbles Joined: 29 Nov 99 Posts: 358 Credit: 5,909,255 RAC: 0

|

I just reread my post and realized I forgot to consider these: 1. Is the 100/mobo limit programmed as a separate variable/constant from the 100/GPU limit? 2. If the code is on the server side, is that a huge issue? I'm guessing it could be part of the Boinc architecture code, or specific code for the S@h implementation. 3. Should I move this type of thread to the Beta forum? Cheers, RobG [edit]I wrote this post without having done a refresh on the thread...so I hadn't seen Richard's great post. I should have mentioned that I wasn't concerned at first about differentiating the # of cores with or w/o HT enabled. The CPU link in the OP already seems to know how many cores a rig has. Some Xeons even have double (sometimes triple) entries for rigs with HT off and on. I hope someone remembers the details of the staff post that I can't find :-/ This post is an offshoot of a post from earlier this month that ...hmmm...isn't my proudest moment. I didn't plan on referring or linking to it...but I think it will be beneficial for the wider context. You'll probably get the gist from reading my last post in the thread[/edit] |

petri33 Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

Hi, We've hit the moment when 100/GPU is not enough for 300 minutes (5 hr) even if all are GBT tasks. Just my $25/month (even though it is not showing properly). To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones |

HAL9000 Joined: 11 Sep 99 Posts: 6534 Credit: 196,805,888 RAC: 57

|

I don't know the method that is currently being used to detect the # of processors, but I'm aware that there are cpuid functions that provide core/thread counts. That may also provide the socket count for the system, or could be somewhat wonky. Providing two lists of core 0,1,2,3 for a dual 4 core MB. If the CPU socket count can be determined, then having a limit of tasks per CPU socket would be helpful for multi-CPU systems. SETI@home classic workunits: 93,865 CPU time: 863,447 hours Join the [url=http://tinyurl.com/8y46zvu]BP6/VP6 User Group[/url]

|
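As a rough illustration of the socket-count idea on Linux, the sketch below counts sockets, physical cores, and logical threads from the text of `/proc/cpuinfo`. It assumes the x86 layout, where each logical CPU block carries `processor`, `physical id`, and `core id` fields; other architectures may omit the last two, in which case the fallbacks here treat the machine as one socket. The function name `count_topology` is invented for this example and is not part of BOINC.

```python
from pathlib import Path


def count_topology(cpuinfo_text: str) -> tuple[int, int, int]:
    """Return (sockets, physical cores, logical threads) from cpuinfo-style text."""
    sockets = set()
    cores = set()
    threads = 0
    # Each logical CPU is described by one blank-line-separated block.
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        threads += 1
        phys = fields.get("physical id", "0")
        core = fields.get("core id", fields["processor"])
        sockets.add(phys)
        # Core ids repeat on every socket (0,1,2,3 twice on a dual quad-core
        # board), so a core is identified by the (socket, core) pair.
        cores.add((phys, core))
    return len(sockets), len(cores), threads


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(count_topology(path.read_text()))
```

Keying on the (socket, core) pair is what resolves the "two lists of core 0,1,2,3" ambiguity mentioned above.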

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13959 Credit: 208,696,464 RAC: 304

|

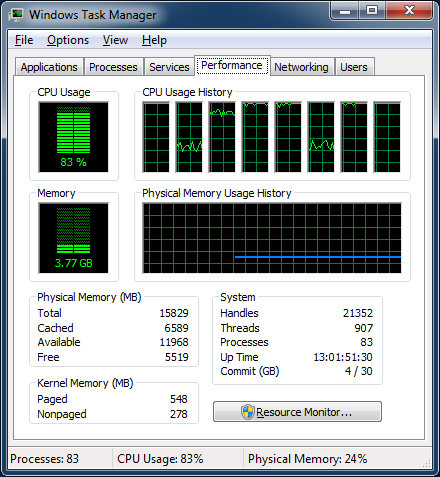

"I don't know the method that is currently being used to detect the # of processors, but I'm aware that there are cpuid functions that provide core/thread counts. That may also provide the socket count for the system, or could be somewhat wonky. Providing two lists of core 0,1,2,3 for a dual 4 core MB." The couple of hardware monitoring programmes I've got both give the number of CPUs, number of cores, and number of threads. Edit - here is an image from Al's latest monster cruncher thread of Coretemp's CPUs/Cores/Threads display. http://i.imgur.com/OhBdoT7.jpg Grant Darwin NT |

MarkJ Joined: 17 Feb 08 Posts: 1139 Credit: 80,854,192 RAC: 5

|

Do we really need to differentiate between processors and thread counts? I mean what would I want to do differently in this case? From a task scheduling point of view I have the same number of available threads that want feeding regardless of my physical processor count. BOINC blog |

|

Kiska Joined: 31 Mar 12 Posts: 302 Credit: 3,067,762 RAC: 0

|

The limit is 100 CPU work units per computer, regardless of your CPU count. Someone here (looks at Al) has a 56-thread machine, which means that machine will run out of work before the weekly downtime finishes. That computer is a dual-socket machine that has, I believe, E5-2xxx v2 procs. If we were to change to a per-CPU-socket limit, then all computers would last through the downtime, but there is the consideration that the server is already stressed enough by the amount of work already sent out (in the field). |
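A back-of-the-envelope sketch of why a 56-thread host drains a 100-task cache quickly. The per-task runtime of 1.5 hours is purely hypothetical (the thread gives no figure), and the function name `hours_until_idle` is made up for this example; the model assumes one task per thread and equal runtimes.

```python
def hours_until_idle(cached_tasks: int, threads: int, hours_per_task: float) -> float:
    """Rough time for a full cache to run dry: one task per thread, equal runtimes."""
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return cached_tasks * hours_per_task / threads


if __name__ == "__main__":
    HYPOTHETICAL_HOURS_PER_TASK = 1.5  # assumption for illustration only
    for threads in (4, 8, 56):
        hours = hours_until_idle(100, threads, HYPOTHETICAL_HOURS_PER_TASK)
        print(f"{threads:>2} threads: 100 tasks last about {hours:.1f} h")
```

Whatever the real runtime is, 100 tasks spread over 56 threads is fewer than two tasks per thread, while a quad core holds 25 per thread.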

rob smith Joined: 7 Mar 03 Posts: 22815 Credit: 416,307,556 RAC: 380

|

I tend to agree with MarkJ. With increasing CPU core/thread counts it is becoming obvious that the 100 per CPU (or is it 100 per motherboard???) is becoming somewhat obsolete. I think something like 100 per 8 cores (or threads) would be a good starting point. Thus 100 for 1-8, 200 for 9-16 et-seq. The data required is already on the servers, so it would take very little extra work to come up with the number of tasks each host could have "in progress". Bob Smith Member of Seti PIPPS (Pluto is a Planet Protest Society) Somewhere in the (un)known Universe? |
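The stepped rule proposed above (100 for 1-8 threads, 200 for 9-16, and so on) comes down to one ceiling division. A minimal sketch, with `tiered_cap` as a made-up name and the step sizes taken straight from the post:

```python
import math


def tiered_cap(threads: int, tasks_per_step: int = 100, threads_per_step: int = 8) -> int:
    """100 tasks for 1-8 threads, 200 for 9-16, and so on in steps of 8."""
    if threads < 1:
        raise ValueError("a host must report at least one thread")
    return tasks_per_step * math.ceil(threads / threads_per_step)


if __name__ == "__main__":
    for threads in (1, 8, 9, 16, 24, 32, 56):
        print(f"{threads:>2} threads -> {tiered_cap(threads)} tasks in progress")
```

Because the first step already covers 1-8 threads, no host ends up with less than the current 100.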

|

AMDave Joined: 9 Mar 01 Posts: 234 Credit: 11,671,730 RAC: 0

|

I tend to agree with MarkJ. For clarification, see Message 1806877 For additional discussion: *EDIT*

|

rob smith Joined: 7 Mar 03 Posts: 22815 Credit: 416,307,556 RAC: 380

|

Ah, but I think Richard and I were talking about different things - I am talking about the number of threads/cores in-situ, not the number in use. For every computer on SETI you can see that number: Authentic AMD AMD FX-8370 Eight-Core Processor [Family 21 Model 2 Stepping 0] (8 processors) In the same way you can see the number of GPUs available (nothing about number of concurrent tasks): [2] NVIDIA GeForce GTX 980 (4095MB) driver: 353.90 OpenCL: 1.2 and some basic information about the operating system: Microsoft Windows 7 Professional x64 Edition, Service Pack 1, (06.01.7601.00) Richard was looking for a more advanced figure, which will not be so easy to extract server-side (if indeed it is possible). Bob Smith Member of Seti PIPPS (Pluto is a Planet Protest Society) Somewhere in the (un)known Universe? |

|

Kiska Joined: 31 Mar 12 Posts: 302 Credit: 3,067,762 RAC: 0

|

GenuineIntel Are there 2 CPUs or 1? BOINC can't distinguish if it has 2 or more sockets. |

|

I3APR Joined: 23 Apr 16 Posts: 99 Credit: 70,717,488 RAC: 0

|

"With increasing CPU core/thread counts it is becoming obvious that the 100 per CPU (or is it 100 per motherboard???) is becoming somewhat obsolete. I think something like 100 per 8 cores (or threads) would be a good starting point. Thus 100 for 1-8, 200 for 9-16 et-seq." Running 4 servers with 32 CPU HT and 1 with 24 not HT, I'm really interested in your proposal. Moreover, I seldom have the luxury of a 24/24h internet connection like I'm having for the WOW, so after 5/6 hours I normally run out of WUs. A. |

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

My guess is you'd need a small sub-database populated with all the relatively recent Intel and AMD CPUs, plus an identifier that lists the number of cores each one has, and then simple division would determine the # of processors? Just tossing out ideas. *edit* On 2nd thought, that probably wouldn't work out as a precise determination. As was noted in the post above, if you have 2 identical dual proc systems running, one with no HT and the other with, my logic couldn't know that the one without it is a dual proc setup. OTOH, it would know with decent certainty that it has at least 2 procs in it, to get to the 48 total, but it also could have 4, however unlikely that is. So, it'd be rewarding ppl with dual procs running HT I suppose, but it would be a start?

|
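The lookup-and-divide idea, including the ambiguity noted in the edit, can be sketched as follows. Everything here is illustrative: the table entry is a placeholder model name (not a real product string), and `candidate_socket_counts` is a made-up helper that returns every socket count consistent with the reported thread total.

```python
# Hypothetical lookup table: CPU model string -> physical cores per package.
CORES_PER_CPU = {
    "Example 12-core CPU": 12,  # placeholder entry, not a real model name
}


def candidate_socket_counts(reported_threads: int, cores_per_cpu: int,
                            supports_ht: bool = True) -> list[int]:
    """Socket counts that could explain the reported number of threads."""
    candidates = set()
    threads_per_core_options = (1, 2) if supports_ht else (1,)
    for threads_per_core in threads_per_core_options:
        per_socket = cores_per_cpu * threads_per_core
        if reported_threads % per_socket == 0:
            candidates.add(reported_threads // per_socket)
    return sorted(candidates)


if __name__ == "__main__":
    cores = CORES_PER_CPU["Example 12-core CPU"]
    # 48 reported threads: two sockets with HT on, or four with HT off.
    print(candidate_socket_counts(48, cores))
```

The smallest candidate is the "at least 2 procs" lower bound from the post; the division alone cannot pick between the candidates without knowing whether HT is enabled.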

rob smith Joined: 7 Mar 03 Posts: 22815 Credit: 416,307,556 RAC: 380

|

Kiska - Doing a simple "core/thread" count it makes no odds. I know it wouldn't be right for everyone, but it would be "less wrong" for the majority of high count users like GazzaVR. Using my proposal four of his servers would each get 400 in their tanks, while the other one would get 300. Bob Smith Member of Seti PIPPS (Pluto is a Planet Protest Society) Somewhere in the (un)known Universe? |

rob smith Joined: 7 Mar 03 Posts: 22815 Credit: 416,307,556 RAC: 380

|

Al - no need for such a complication - the data is already there, on the SETI servers, they "know" how many cores/threads a computer is reporting, and that's all that is taken into account. Bob Smith Member of Seti PIPPS (Pluto is a Planet Protest Society) Somewhere in the (un)known Universe? |

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

Oh, well, ok then! :-)

|

Richard Haselgrove Joined: 4 Jul 99 Posts: 14690 Credit: 200,643,578 RAC: 874

|

"Richard was looking for a more advanced figure, which will not be so easy to extract server-side (if indeed it is possible)." The thread has become somewhat confusing because my post has become the opening post, when really (as you can see) I was replying to message 1811831 (not currently linkable). But it can be read, if you know how and where to look - and the screengrabs about 8 HT threads on one socket, versus 8 threads on two full four-core sockets, were actually a slightly over-hasty response to the statement "I remember reading a post (which I can't find) by someone from the staff (Dr Korpela?) about NOT being able to program Boinc to recognize multiple CPUs". But the primary interest of the thread starter was to explore the possibility of a graduated "max [CPU] tasks in progress" value dependent on the number of threads available in total - which, as people have said, BOINC already has available. The thread starter suggested: "We'd just have to determine a good max/CPUcore that wouldn't have a negative impact on:" and then convince the staff that the change would be worth implementing, from the project's point of view. Actually, if you look at http://boinc.berkeley.edu/trac/wiki/ProjectOptions#Joblimits, SETI could be using <max_wus_in_progress> N </max_wus_in_progress> already, but has chosen instead to operate the "Job limits (advanced)" in the following section. They must have had a reason for choosing to do that. |

|

AMDave Joined: 9 Mar 01 Posts: 234 Credit: 11,671,730 RAC: 0

|

Richard, could you provide a link to, or the title of, that thread? I'd like to refresh my memory. |
