Linux, Nvidia 750-TI and Multiple Tasks.


| Author | Message |

|---|---|

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

I have installed an Nvidia 750-TI on a Linux server and can now run both MBs and APs with it using setiathome_x41zc_x86_64-pc-linux-gnu_cuda60 and ap_7.05r2728_sse3_linux64 respectively. In reading about others who are running GPUs, it seems that if you run two, or even occasionally three, WUs simultaneously on the GPU you gain in efficiency. That is not what I have found with the 750-TI on my server. What I have found is that if I run two WUs at the same time, they individually take around twice as long, so there is no gain. In fact, based on the power consumed and the card temperatures, the card might even be working a little less hard with two WUs than with one. Is there something I am missing or not doing that I should be doing, other than reserving one CPU core for the card? I have not tried reserving two cores when doing two GPU tasks because "top" always reports less than 10% utilization by the one core that is being used by the GPU. I also tried playing with <GPU_usage> and <cpu_usage> without much effect. I am guessing that the switching overhead accounts for the slight decrease in efficiency with two WUs over one, so perhaps there is nothing to be gained. On the other hand, using 271 MB of the 2 GB of VRAM available for one WU while only using 40 watts of power makes it seem that there is more the card could be doing. |
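Editor's sketch: the "twice as long, so no gain" observation can be checked with simple throughput arithmetic. The run times below are hypothetical placeholders, not measurements from this thread, and `tasks_per_hour` is an illustrative helper name.

```python
def tasks_per_hour(concurrent_tasks, seconds_per_task):
    """Completed WUs per hour when `concurrent_tasks` run side by side."""
    return concurrent_tasks * 3600.0 / seconds_per_task

# Hypothetical run times, chosen only to illustrate the pattern.
one_at_a_time = tasks_per_hour(1, 800.0)
two_at_a_time = tasks_per_hour(2, 1600.0)  # each WU takes twice as long
two_with_gain = tasks_per_hour(2, 1400.0)  # what a real gain would look like

print(f"1 WU at a time:            {one_at_a_time:.2f} tasks/hour")
print(f"2 WUs, each twice as long: {two_at_a_time:.2f} tasks/hour")
print(f"2 WUs, each 1.75x as long: {two_with_gain:.2f} tasks/hour")
```

Running two WUs only pays off when each one takes less than twice the single-WU time, which is the comparison to make against your own task times.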

jason_gee jason_gee Send message Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

Unfortunately I don't know enough about the Linux driver model to say things with authority, but can point out the reasons it works like that on Windows so you can compare.

The first main point is that running multiple Cuda/OpenCL tasks on Windows is effective because the driver architecture has considerable latency (i.e. it stalls for some period when issued a command). Because of the way the applications have had to scale from very small to very large GPUs, the current (let's call them 'traditional') applications are quite 'chatty', and make limited use of Cuda streams, which are another, finer-grained latency hiding mechanism. So for now, on Windows at least, running multiple tasks per GPU amounts to a coarse-grained and not-super-efficient latency hiding mechanism that is pretty hard on cache mechanisms, PCIe and drivers.

The second point is that the Linux drivers are, I believe (again, limited knowledge here), built in as kernel modules, while the Windows ones involve 'user mode drivers'. Leaving out a whole swathe of DirectX related commands, they are probably substantially leaner and able to use some GPU features more directly (manifesting in a lower latency). That's important because if you try to hide latencies that aren't there, you'll get the extra costs imposed by extra tasks (cache abuse and OS scheduling overheads) without gaining much from latency hiding (because there isn't as much there to hide).

Lastly, the 750ti itself doesn't have a huge number of multiprocessors or high bandwidth (even though it is certainly much 'larger' than low power cards in previous generations). Those considerations form a performance ceiling highly dependent on the application design, which is overdue for some major changes.

There are a lot of changes happening simultaneously, and I'll probably miss some, but here they are in bullet points:

- We (Petri33 and myself) are building and testing more use of Cuda streams and 'larger' code into some application areas, as well as reducing 'chattiness'.
- Cuda 7, which came out recently, has better support for the Maxwell architecture. Combined with the Kepler 2 (GK110, GTX780 etc.) changes, this involves big shifts in the way parallelism is done from Big K onwards, which we are still coming to grips with. It also mandates 64 bit, which has a performance penalty on the GPUs through larger addresses chewing up registers, but the hope is that improved mechanisms might bury those costs.
- Windows 10 is also moving to a lower latency driver architecture, so things will change with respect to the optimal number of tasks there as well (to some extent even on earlier OSes, where the drivers will change even though DirectX12 won't be available on older generations).

That all amounts to a picture where in the future running fewer tasks will probably be better (more efficient, higher overall throughput etc.), but it will still vary by OS, drivers, GPU generation, application(s), and your particular goals. Pretty complex, but ongoing maturation of GPGPU has meant trying to manage these changes, which hasn't been without some pretty hefty bumps in the road.

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |
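Editor's sketch: the latency hiding argument above can be illustrated with a deliberately crude toy model. It assumes each task alternates between a burst of GPU work and a driver stall during which another task could use the GPU, and it ignores the cache, PCIe and scheduling costs mentioned in the post. The function name and all timings are hypothetical; this is not how the real applications or drivers are modelled.

```python
def gpu_utilisation(tasks, compute_ms, latency_ms):
    """Fraction of time the GPU is busy in the toy model.

    Each task does `compute_ms` of GPU work, then stalls for `latency_ms`
    while the driver/host catches up. Other tasks may fill the stall.
    Contention costs are ignored, so this is an upper bound.
    """
    busy_fraction_per_task = compute_ms / (compute_ms + latency_ms)
    return min(1.0, tasks * busy_fraction_per_task)

# Hypothetical timings: a high-latency driver versus a low-latency one.
for label, latency_ms in (("high latency", 5.0), ("low latency", 0.5)):
    one = gpu_utilisation(1, 5.0, latency_ms)
    two = gpu_utilisation(2, 5.0, latency_ms)
    print(f"{label}: 1 task {one:.0%}, 2 tasks {two:.0%}, gain {two / one:.2f}x")
```

In this model the second task doubles utilisation when stalls are long but adds only about 10% when they are short, which is the shape of the argument: with little latency to hide, the extra task mostly brings its overheads.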

jason_gee jason_gee Send message Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

> I also tried playing with <GPU_usage> and <cpu_usage> without much effect.

Those are hints to the Boinc scheduler, rather than application controls. For the time being utilisation and bandwidth considerations are unthrottled, and therefore 'controlled' by whatever system bottleneck is the tightest. With the current generation of applications on higher performance cards, the most dominant bottleneck is driver latency. This is likely to change in the x42 series, as I move to completely different mechanisms for GPU synchronisation (after our Cuda streams testing as x41zd). The goals for x42 include a kind of time-based work issue, which would be configurable for different user scenarios (via a simple tool). |

petri33 petri33 Send message Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

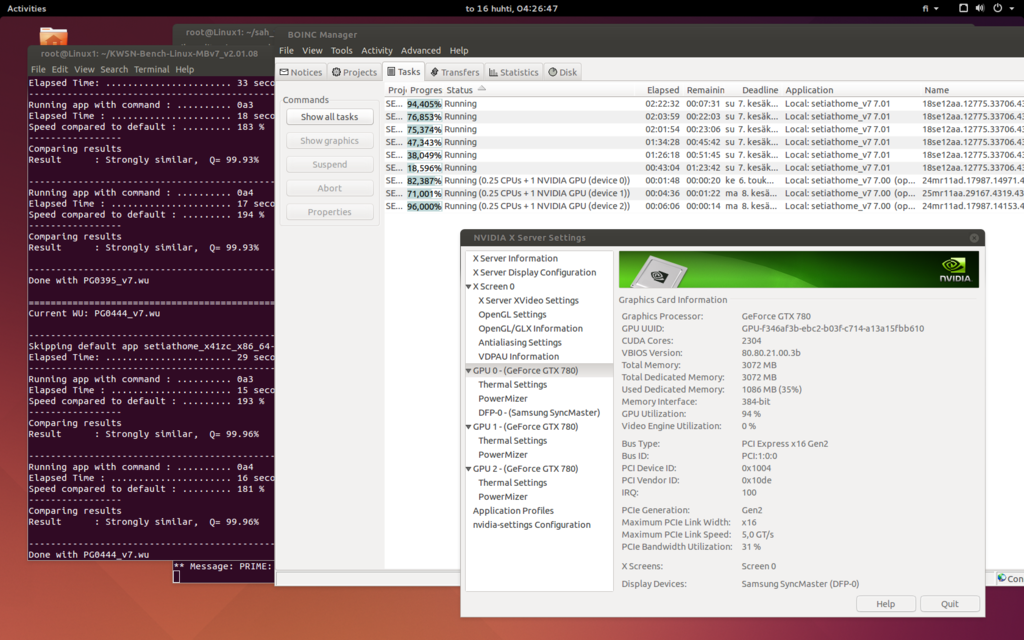

> Unfortunately I don't know enough about the Linux driver model to say things with authority, but can point out the reasons it works like that on Windows so you can compare.

Here is a snapshot of my desktop just now, as I go to sleep for an hour and a half before a working day. (I'm running one task at a time per card. When testing run times of my latest compilations I shut down boincmgr.)

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones |

ML1 ML1 Send message Joined: 25 Nov 01 Posts: 20252 Credit: 7,508,002 RAC: 20

|

Nice shot there! And good effort. Not sure you can get much better than 94% GPU utilisation for any system... You might squeeze a bit more by turning off the desktop compositing and some of the other eye-candy 'bling' effects. (Never thought I'd be having to advise to turn off 'blinging' for Linux !!) Happy fast x3 x2304 = x6912 crunchin'! Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

petri33 petri33 Send message Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

I have looked at the GPU usage with the Nvidia profiler and identified a few places where computation can overlap more than it does now. So I hope to make some advances still. Autocorrelations are one place to start with. -- petri33 |

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> Nice shot there! And good effort.

I would say 94% is darn good, particularly for Linux. After reading Jason's postings (thank you), it looks like I am stuck, at least for the time being, at around 55% with my 750-TI. I am basing that on the 40 watt increase in power the PC draws when it is working. The "NVIDIA-SMI 346.47" only reports temperature (45C) and fan speed (32%). The good news is I do not think I will ever have to worry about the card failing from overwork :). |

|

castor Send message Joined: 2 Jan 02 Posts: 13 Credit: 17,721,708 RAC: 0

|

It's probably more than 55% though. Here (750Ti also) the cuda60 app uses about 86% when running one WU at a time. Running two, it jumps to ~99% (as reported by nvidia-settings). This is when the card is plugged into the "secondary" PCIe slot (x4 link). Oddly (to me at least), when it is plugged into the primary slot, utilization gets close to 99% already with one WU. |

jason_gee jason_gee Send message Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

hmm, comparing differences in those hosts could be well worthwhile. |

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> hmm, comparing differences in those hosts could be well worthwhile.

Here is some information on my server. If there is something else I should be showing, let me know.

- Motherboard: Supermicro X10SAE
- CPU: Intel(R) Xeon(R) CPU E3-1230 v3 @ 3.30GHz (3500 MHz)
- RAM: 8 GB (2 x 4 GB DDR3 1600 MHz)
- Slot used by the 750-TI: SLOT6 PCI-E 3.0 X16
- Video card: MSI GEFORCE GTX 750TI (N750TI TF 2GD5/OC)

I am running the CPU with Hyper-threading, with seven processes for the CPU tasks and one available for the GPU. Utilization reported by "top" is generally 99% or better for the CPU processes doing CPU tasks and 20% to 25% for the process handling the GPU. |

jason_gee jason_gee Send message Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

Since you are running hyperthreaded, the cache, timeslices and execution resources are split. If you free an extra (virtual) core, you may see some improvement. |

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> Since you are running hyperthreaded, the cache, timeslices and execution resources are split. If you free an extra (virtual) core, you may see some improvement.

I will try that again with just one task on the GPU, but as I recall I tried that configuration when trying to run two tasks on the GPU. It didn't help at all, and I figured all I ended up doing was losing what the missing virtual core might have produced for work.

Edit: I am now only running 6 virtual cores. It will be a while before some results are posted with times, but I suspect there will not be much of an improvement, if any. I am basing that on "top", which is still showing only one virtual core being used by the GPU at 20% to 25%. In addition, the GPU temperature has not changed. I cannot really say much about the power change, because dropping the 7th task changed what the CPU was drawing, so that changed the baseline I was using. The task times will tell for sure. |

Mike Mike Send message Joined: 17 Feb 01 Posts: 34255 Credit: 79,922,639 RAC: 80

|

You need to increase the -unroll and -ffa_block values to speed things up, either in the command line in app_info.xml or in the command line .txt file. Example: -unroll 8 -oclFFT_plan 256 16 512 -ffa_block 12288 -ffa_block_fetch 6144

With each crime and every kindness we birth our future. |

|

castor Send message Joined: 2 Jan 02 Posts: 13 Credit: 17,721,708 RAC: 0

|

Here's my data for comparison.

* MB: GA-Z77-DS3H
* GPU: Asus GTX750TI-PH-2GD5
* CPU: i7-3770 CPU @ 3.40GHz (4 cores, HT disabled)
* OS: Debian Jessie (kernel 3.16.7 with custom config)

Running 2 GPU WUs + 3 CPU WUs:

Tasks: 96 total, 5 running, 91 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.0 us, 1.4 sy, 54.4 ni, 41.8 id, 0.0 wa, 0.0 hi, 2.3 si, 0.0 st

KiB Mem: 4009492 total, 862888 used, 3146604 free, 904 buffers

KiB Swap: 291836 total, 0 used, 291836 free. 263612 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1738 boinc 39 19 52616 42764 4272 R 99.1 1.1 58:22.24 MBv7_7.05r2550_

1767 boinc 39 19 52616 42760 4272 R 99.1 1.1 40:11.67 MBv7_7.05r2550_

1798 boinc 39 19 53732 43872 4272 R 99.1 1.1 10:02.48 MBv7_7.05r2550_

1792 boinc 30 10 24.330g 223416 91068 S 13.2 5.6 1:23.21 setiathome_x41z

1833 boinc 30 10 24.327g 206180 90784 R 13.2 5.1 0:38.93 setiathome_x41z

1 root 20 0 28752 4864 3084 S 0.0 0.1 0:00.54 systemd

~$ nvidia-settings -q [gpu:0]/PCIEGen -q [gpu:0]/BusRate -q [gpu:0]/GPUPerfModes -q [gpu:0]/GPUCurrentPerfLevel -q [gpu:0]/GPUCurrentClockFreqs -q [gpu:0]/PCIECurrentLinkSpeed -q [gpu:0]/PCIECurrentLinkWidth -q [gpu:0]/TotalDedicatedGPUMemory -q [gpu:0]/UsedDedicatedGPUMemory -q [gpu:0]/GPUCoreTemp -q [gpu:0]/GPUUtilization

Attribute 'PCIEGen' (cvb:0[gpu:0]): 2.

Attribute 'BusRate' (cvb:0[gpu:0]): 16.

Attribute 'GPUPerfModes' (cvb:0[gpu:0]): perf=0, nvclock=135, nvclockmin=135, nvclockmax=405, nvclockeditable=0, memclock=405, memclockmin=405, memclockmax=405, memclockeditable=0, memTransferRate=810,

memTransferRatemin=810, memTransferRatemax=810, memTransferRateeditable=0 ; perf=1, nvclock=135, nvclockmin=135, nvclockmax=1293, nvclockeditable=0, memclock=2700, memclockmin=2700, memclockmax=2700,

memclockeditable=0, memTransferRate=5400, memTransferRatemin=5400, memTransferRatemax=5400, memTransferRateeditable=0

Attribute 'GPUCurrentPerfLevel' (cvb:0[gpu:0]): 1.

Attribute 'GPUCurrentClockFreqs' (cvb:0[gpu:0]): 1189,2700.

Attribute 'PCIECurrentLinkSpeed' (cvb:0[gpu:0]): 5000.

Attribute 'PCIECurrentLinkWidth' (cvb:0[gpu:0]): 4.

Attribute 'TotalDedicatedGPUMemory' (cvb:0[gpu:0]): 2048.

Attribute 'UsedDedicatedGPUMemory' (cvb:0[gpu:0]): 536.

Attribute 'GPUCoreTemp' (cvb:0[gpu:0]): 59.

Attribute 'GPUUtilization' (cvb:0[gpu:0]): graphics=99, memory=72, video=0, PCIe=0

Running one GPU WU + 3 CPU WUs:

Tasks: 105 total, 5 running, 100 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.1 us, 1.8 sy, 64.5 ni, 31.2 id, 0.1 wa, 0.0 hi, 2.4 si, 0.0 st

KiB Mem: 4009492 total, 548820 used, 3460672 free, 880 buffers

KiB Swap: 291836 total, 0 used, 291836 free. 183296 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

843 boinc 39 19 50048 32968 4224 R 99.0 0.8 2:42.87 MBv7_7.05r2550_

844 boinc 39 19 50048 32984 4236 R 99.0 0.8 2:42.81 MBv7_7.05r2550_

845 boinc 39 19 50048 32988 4232 R 99.0 0.8 2:42.78 MBv7_7.05r2550_

839 boinc 39 19 24.327g 199324 90076 R 26.4 5.0 0:39.36 setiathome_x41z

1 root 20 0 28808 4896 3076 S 0.0 0.1 0:00.37 systemd

~$ nvidia-settings.....

Attribute 'PCIEGen' (cvb:0[gpu:0]): 2.

Attribute 'BusRate' (cvb:0[gpu:0]): 16.

Attribute 'GPUCurrentPerfLevel' (cvb:0[gpu:0]): 1.

Attribute 'GPUCurrentClockFreqs' (cvb:0[gpu:0]): 1189,2700.

Attribute 'PCIECurrentLinkSpeed' (cvb:0[gpu:0]): 5000.

Attribute 'PCIECurrentLinkWidth' (cvb:0[gpu:0]): 4.

Attribute 'TotalDedicatedGPUMemory' (cvb:0[gpu:0]): 2048.

Attribute 'UsedDedicatedGPUMemory' (cvb:0[gpu:0]): 280.

Attribute 'GPUCoreTemp' (cvb:0[gpu:0]): 57.

Attribute 'GPUUtilization' (cvb:0[gpu:0]): graphics=85, memory=67, video=0, PCIe=0

So GPU utilization dropped from 99 to 85 when switched from 2 to 1 WUs. According to my wattmeter, power usage also decreased by one Watt :-) |
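Editor's sketch: the comparison above was read off the `nvidia-settings` output by eye. A small parser for the `Attribute '...' (...): value` lines, assuming the output format is exactly as pasted in this post, makes the two runs easy to diff. The function names are illustrative, and the sample text is copied from the listings above.

```python
import re

# Matches lines such as: Attribute 'GPUCoreTemp' (cvb:0[gpu:0]): 59.
ATTRIBUTE_LINE = re.compile(r"Attribute '(\w+)' \([^)]*\): (.*)")

def parse_attributes(output):
    """Map attribute name to its raw value string (trailing '.' removed)."""
    attributes = {}
    for line in output.splitlines():
        match = ATTRIBUTE_LINE.search(line)
        if match:
            name, value = match.groups()
            attributes[name] = value.strip().rstrip(".")
    return attributes

def parse_utilisation(value):
    """Turn 'graphics=99, memory=72, ...' into a dict of integers."""
    pairs = (item.split("=") for item in value.split(","))
    return {key.strip(): int(number) for key, number in pairs}

TWO_WUS = """Attribute 'UsedDedicatedGPUMemory' (cvb:0[gpu:0]): 536.
Attribute 'GPUCoreTemp' (cvb:0[gpu:0]): 59.
Attribute 'GPUUtilization' (cvb:0[gpu:0]): graphics=99, memory=72, video=0, PCIe=0"""

ONE_WU = """Attribute 'UsedDedicatedGPUMemory' (cvb:0[gpu:0]): 280.
Attribute 'GPUCoreTemp' (cvb:0[gpu:0]): 57.
Attribute 'GPUUtilization' (cvb:0[gpu:0]): graphics=85, memory=67, video=0, PCIe=0"""

two = parse_attributes(TWO_WUS)
one = parse_attributes(ONE_WU)
util_two = parse_utilisation(two["GPUUtilization"])
util_one = parse_utilisation(one["GPUUtilization"])
drop = util_two["graphics"] - util_one["graphics"]

print(f"graphics utilisation: {util_two['graphics']}% -> {util_one['graphics']}%")
print(f"difference: {drop} percentage points")
print(f"VRAM used: {two['UsedDedicatedGPUMemory']} MB -> {one['UsedDedicatedGPUMemory']} MB")
```

The multi-line `GPUPerfModes` attribute is not handled here; it would need its own parsing.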

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> You need to increase the -unroll and -ffa_block values to speed things up, either in the command line in app_info.xml or in the command line .txt file.

Is that really an example, or is it exactly what I should use? Also, if it goes in the app_info.xml, I assume there must be some sort of <text> </text> that goes around it and that it should be in both the AP and MB sections of the file. Is that correct and, if so, what should that text be? In the meantime, I will see what a search can do to provide some enlightenment. You have to remember that what I know about SETI and custom configurations you could put in a thimble and have room left over for a finger.

Edit: It looks like what I should use is <cmdline> after <max_ncpus> in both the AP and MB GPU sections. I will try your example and see what happens. |
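Editor's sketch: one way to place a `<cmdline>` element after `<max_ncpus>`, as described in the edit above, is to patch the file programmatically. The XML fragment below is a trimmed, hypothetical stand-in for a real app_info.xml (the app name `example_gpu_app` and the ncpus values are placeholders), and the sketch does not check whether a given application actually accepts these options.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed app_info.xml; a real file has more elements.
SAMPLE = """<app_info>
  <app_version>
    <app_name>example_gpu_app</app_name>
    <avg_ncpus>0.04</avg_ncpus>
    <max_ncpus>0.2</max_ncpus>
  </app_version>
</app_info>"""

# Option string quoted from the example given earlier in the thread.
OPTIONS = "-unroll 8 -oclFFT_plan 256 16 512 -ffa_block 12288 -ffa_block_fetch 6144"

def set_cmdline(app_info_xml, app_name, options):
    """Return the XML with <cmdline> set for every matching <app_version>."""
    root = ET.fromstring(app_info_xml)
    for version in root.iter("app_version"):
        if version.findtext("app_name") != app_name:
            continue
        cmdline = version.find("cmdline")
        if cmdline is None:
            cmdline = ET.Element("cmdline")
            children = list(version)
            max_ncpus = version.find("max_ncpus")
            # Insert right after <max_ncpus> if present, else append.
            if max_ncpus is not None:
                position = children.index(max_ncpus) + 1
            else:
                position = len(children)
            version.insert(position, cmdline)
        cmdline.text = options
    return ET.tostring(root, encoding="unicode")

patched = set_cmdline(SAMPLE, "example_gpu_app", OPTIONS)
print(patched)
```

Editing the file by hand works just as well; keep a backup of app_info.xml either way.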

|

Andrew Send message Joined: 28 Mar 15 Posts: 47 Credit: 1,053,596 RAC: 0

|

@Castor I think I *might* have an explanation for the performance difference you are seeing between the two PCI-E slots: http://www.theregister.co.uk/2015/04/14/pcie_breaks_out_server_power/?page=1 The difference in performance between the card in the x16 slot and in the x4 (physically x16) slot is probably due to the full x16 slot being directly linked to the CPU, while the other PCI-E slots go through the southbridge. http://www.intel.com/content/dam/www/public/us/en/images/product/Z77-blockdiagram_450x408.jpg |

|

OTS Send message Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> Since you are running hyperthreaded, the cache, timeslices and execution resources are split. If you free an extra (virtual) core, you may see some improvement.

I have some results now from running the GPU with just 6 virtual cores on the CPU. Although the time it takes the GPU to process a task varies, some of the times seem to fall into groups where they are similar. One such group on my server tends to take around 800 seconds of processing time pretty consistently. Based on that, I confined what I looked at to tasks that ran about 800 seconds, to compare apples with apples. There were 8 in the first group, which ran with 7 virtual cores, and 7 in the second group, which ran with 6 virtual cores. The first group averaged 844.73 seconds to complete a task, with an average CPU time of 158.61 seconds. The second group averaged 820.50 seconds to complete a task, with an average CPU time of 138.68 seconds. Since the sample size is small, I am not sure how relevant the results really are, but they would seem to indicate that there is a noticeable improvement in the CPU time needed to complete a task when running only 6 virtual cores, but that any overall improvement is marginal because the running times are still about the same. At this point I would say keeping the 7th virtual core running a task might be the most productive overall. Your thoughts? |
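Editor's sketch: the trade-off in the post above comes down to three percentages, computed here from the averages quoted in it. The only assumption added is that dropping from 7 to 6 CPU tasks costs CPU throughput in proportion to the task count; the helper name is illustrative.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0

# Averages quoted in the post above (7 virtual cores vs. 6 virtual cores).
elapsed_change = percent_change(844.73, 820.50)   # GPU task run time
cpu_time_change = percent_change(158.61, 138.68)  # CPU time per GPU task
cpu_tasks_change = percent_change(7, 6)           # concurrent CPU tasks

print(f"GPU task elapsed time: {elapsed_change:+.1f}%")
print(f"CPU time per GPU task: {cpu_time_change:+.1f}%")
print(f"Concurrent CPU tasks:  {cpu_tasks_change:+.1f}%")
```

The GPU tasks finish about 3% sooner while CPU task capacity falls by about 14%, which is consistent with the conclusion that keeping the 7th virtual core busy is likely the more productive choice. With only 8 and 7 samples, the 3% figure is well within what noise could produce.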

|

castor Send message Joined: 2 Jan 02 Posts: 13 Credit: 17,721,708 RAC: 0

|

@Andrew: You may be onto something there. I'd guess the actual throughput isn't an issue, but perhaps there are some additional latencies involved, don't know. Anyway, I decided to keep it in the x4 slot because, interestingly, it appears to also tame some of the coil whine (running the thing with the case open now, and the current location isn't too optimal for that). |

|

Andrew Send message Joined: 28 Mar 15 Posts: 47 Credit: 1,053,596 RAC: 0

|

> @Andrew: You may be onto something there. I'd guess the actual throughput isn't an issue, but perhaps there are some additional latencies involved, don't know. Anyway, I decided to keep it in the x4 slot because, interestingly, it appears to also tame some of the coil whine (running the thing with the case open now, and the current location isn't too optimal for that).

It probably does have an effect, as the PCI-E lanes from the southbridge are slower links (gen 2) and have to contend with the shared DMI bus between the CPU and southbridge, which doesn't have much bandwidth. There are some articles on Tom's Hardware where they do SSD testing and max out the DMI bus before the SSDs max out. AMD's chipsets, on the other hand, have all PCI-E lanes coming from the external northbridge (i.e. the SR5690 chip), and there's a high-throughput interconnect between that chip and the CPU.
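Editor's sketch: to put rough numbers on the slot difference, the theoretical one-direction bandwidth of a PCIe link follows from the per-lane transfer rate and the line encoding (8b/10b for gen 1 and 2, 128b/130b for gen 3). These are link maxima before protocol overhead, not measured throughput, and they say nothing about the latency or DMI contention discussed above. The function name is illustrative.

```python
# Per generation: (transfer rate in GT/s, payload bits, encoded bits)
ENCODING = {
    1: (2.5, 8, 10),
    2: (5.0, 8, 10),
    3: (8.0, 128, 130),
}

def link_bandwidth_gb_s(generation, lanes):
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    rate_gt_s, payload_bits, encoded_bits = ENCODING[generation]
    per_lane_gbit_s = rate_gt_s * payload_bits / encoded_bits
    return per_lane_gbit_s * lanes / 8.0

# castor's reported link: PCIEGen 2, link speed 5000, width 4
x4_gen2 = link_bandwidth_gb_s(2, 4)
# OTS's slot is listed as PCI-E 3.0 x16 (whether the card links at x16 is not shown)
x16_gen3 = link_bandwidth_gb_s(3, 16)

print(f"gen 2 x4 : {x4_gen2:.2f} GB/s")
print(f"gen 3 x16: {x16_gen3:.2f} GB/s")
```

The x4 gen 2 link has roughly an eighth of the peak bandwidth of a gen 3 x16 link, yet castor's PCIe utilisation reads 0 in both runs, which fits the guess that latency rather than raw throughput is the more likely factor.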
