Main cruncher dead


Michel Makhlouta Joined: 21 Dec 03 Posts: 169 Credit: 41,799,743 RAC: 0

Some of you might be familiar with my setup, but here it is:

- Motherboard: MSI Z87 MPOWER MAX AC
- GPU1 (upper): MSI 780 TF
- GPU2 (lower): MSI 780 TF
- PSU: Thermaltake 1275W Platinum

I've been away for a month, and the last time I checked my system remotely (a few days back, I think), all was well. I just came back home to a dead system. The setup had been running on GPU1 for 10 months, and I added the second GPU a few months ago.

When I try to turn it on, it powers up for a split second and then turns off. I removed GPU2; the problem didn't go away. I removed GPU1, and it booted on the iGPU. I inserted GPU2 in GPU1's slot, and the system runs as well. It seems that GPU1 is keeping my system from powering up.

This rig has been running 24/7 at full load for a long time, and temps on GPU1 were higher due to its location, but never more than 80C. Any ideas what's going on? I thought that if a GPU were damaged, the system would at least boot, leaving the card unrecognized or crashing. Could it be the fans? I've had them running at 80%. I wonder if anyone has experienced this before, and what the cause was. Hopefully it will be an easy fix and not just another dead card. The tougher question is: could it be because of BOINC?

I am keeping the system off for today; I am too tired to put things back together. I apologize, fellow crunchers, in case I can't restore things before my WU deadlines.

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

Hi Michel,

I've had a couple of dead 780s in the past year. All were in slot 1. I think the heat has a lot to do with it. The only other question I would have is: does putting GPU1 into slot 2 also prevent the system from booting if GPU2 is already in slot 1? If it does, then yes, you have a malfunctioning GPU on your hands.

You are going to want to check the MoBo to make sure you don't see any burn or scorch marks around slot 1 (check the back side of the board as well). Take a look at the 6- and 8-pin power connectors and make sure there isn't anything there either.

Of the 3 dead 780s I've had, 2 burned out and were easily identified by the burned smell coming from them when I moved them to another room. I replaced the first Mobo, as I believed it was damaged after that first incident. The second 780 also burned out, but there was no damage to my Mobo, and the PSU is stable. The 3rd 780 was an RMA'ed GPU that didn't last more than 10 days. It did not burn out; it just stopped working. (I think it was a refurbished unit, which explains why it didn't last long.)

I don't believe BOINC has anything to do with it. Given it was running at a higher temp for long periods of time, it's not unusual to think that it would eventually fail. As I see it, they guarantee their cards for normal use, but crunching 24/7/365 will eventually lead to some failures.

Hope this helps. Happy Crunching...

Zalster

kittyman Joined: 9 Jul 00 Posts: 51468 Credit: 1,018,363,574 RAC: 1,004

My guess is that GPU1 is shorted, and that's why the PSU kicked on and then off right away. It goes into overload protection and shuts down immediately. I have had this happen here. If the GPU is under warranty, get an RMA for it. "Freedom is just Chaos, with better lighting." Alan Dean Foster


Michel Makhlouta Joined: 21 Dec 03 Posts: 169 Credit: 41,799,743 RAC: 0

GPU2 works in the same slot where GPU1 was, and I've checked for burn marks and didn't find any. The cruncher is back online with one GPU. I will let it finish its current workload but won't get any new tasks for now. I'll decide whether to stop crunching or to reduce the load to avoid this happening again. It's kind of stupid that they put the slots so close to each other and make the SLI cable so short. I will try to get an RMA, or sell the other 780 and get a couple of 980s... or I will give up on SLI and keep it simple with a single GPU.

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

You don't need to have the 2 GPUs in SLI for Seti. You only need to connect the 2 GPUs via the SLI bridge for gaming. But yes, there definitely needs to be more room between the GPUs to help keep the temp down. Have you looked at placing a fan directly over the GPUs to blow cooler air onto them? I removed the side panel and installed several fans on it to pull outside air into my case and over the GPUs to help keep the temp down. The 980s definitely run cooler.

Good luck with your computer. Happy Crunching....

Zalster

Mr. Kevvy Joined: 15 May 99 Posts: 3776 Credit: 1,114,826,392 RAC: 3,319

> Motherboard: MSI Z87 MPOWER MAX AC

If it does turn out to be the motherboard, please give this a read. I will personally never buy an MSI product again.


Michel Makhlouta Joined: 21 Dec 03 Posts: 169 Credit: 41,799,743 RAC: 0

> You don't need to have the 2 GPU in SLI for Seti.

This is my gaming rig, but since I travel a lot, it has been running Seti most of the time. I've got a 23cm side intake fan covering both GPU slots, but no matter what, there's no way to keep the upper GPU from being affected by the lower one. If you've got some fans mounted inside the case, I would be interested to see some pics.

Michel Makhlouta Joined: 21 Dec 03 Posts: 169 Credit: 41,799,743 RAC: 0

> Motherboard: MSI Z87 MPOWER MAX AC

Interesting read... There are no similar warnings on the page dedicated to the mobo I'm using, and so far nothing points to the motherboard being the source of the problem. The GPUs (MSI as well) are labeled as gaming boards, which might support the argument that they are not designed for 24/7 use at 100% load, since nobody can game that long; that's one of the differences between server-grade and consumer-grade hardware. However, the "military class" capacitors they brag about on their site are supposed to survive 10 years in bad conditions (80 degrees) and 18 years if kept below 75. I like those GPUs; they performed well while gaming and were quiet. When I was still using a single-GPU setup, the temperatures were always below 70. Adding a 2nd GPU led to an increase of 10 degrees on the upper GPU.

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

If you get another two GPU cards to run in an SLI configuration, I would suggest you get blower or reference-design coolers. They are the only kind I run anymore, because they will not overheat when placed in adjacent slots. Or, better still, space them one slot apart for even better cooling and get an extra-long SLI bridge connector. They are not that hard to find.

Cheers, Keith

P.S. I can also recommend the 900 series cards for their better power and heat efficiency.

Seti@Home classic workunits: 20,676 CPU time: 74,226 hours. A proud member of the OFA (Old Farts Association)

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

> You don't need to have the 2 GPU in SLI for Seti.

Actually, I mounted them outside the case. This was my original case here. The 2 140s pushed air into the case over the GPUs. After I started adding more and more GPUs, I changed to this case: http://www.newegg.com/Product/Product.aspx?Item=9SIA0AJ2FN6559&cm_re=corsair-_-11-139-022-_-Product

It was supposed to provide better airflow, but it wasn't designed with ACX fans in mind. I found that the ACX fans were pushing hot air back into my case. I played with numerous configs until I finally settled on what I consider a good setup for me.

What I ended up doing is mounting the radiator to the upper part of the case and having the 2 140s pull hot air out of the case through the radiator. (I know that sounds wrong, but give me a minute to explain.) The exhaust fan in the back of the case is a 140 that I reversed, so it pulls air into the case under the radiator and keeps the temp lower. Next, I reversed the direction of the 2 140s at the front so they pull hot air out of the case. Last, I removed the clear side panel and installed a 200 mm fan pushing air directly over the 3 GPUs.

Does it help? Somewhat. I still have to limit the upper GPU to 76C with Precision X, but the temps aren't so bad: 76C, 61C, 64C. So yeah, not the greatest, but manageable. If I remove the middle GPU, the temp of the first drops to 60C, so that air impedance causes a lot of issues.

Just some ideas. Hope this helps.

Happy Crunching...

Zalster

Michel Makhlouta Joined: 21 Dec 03 Posts: 169 Credit: 41,799,743 RAC: 0

I made a mistake with the motherboard; I should've gone with a gaming one instead of an overclocking one. It only supports 2-way SLI, and those 2 slots are right next to each other. The 3rd slot can't be used for SLI, as it is x4 and SLI requires a minimum of x8. I already have 4 fans and a radiator on top in a push/pull configuration, plus a 200mm front intake, a 200mm side intake, and a 140mm rear exhaust. There's no way to keep the upper GPU's fans from taking in the hot air from the lower GPU. I can try using the second GPU in the 3rd slot, forget about SLI when I am not gaming, and see if the temps are manageable. We'll see; it depends on the RMA. If I don't get one, I am back to a single-GPU setup anyway.

petri33 Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

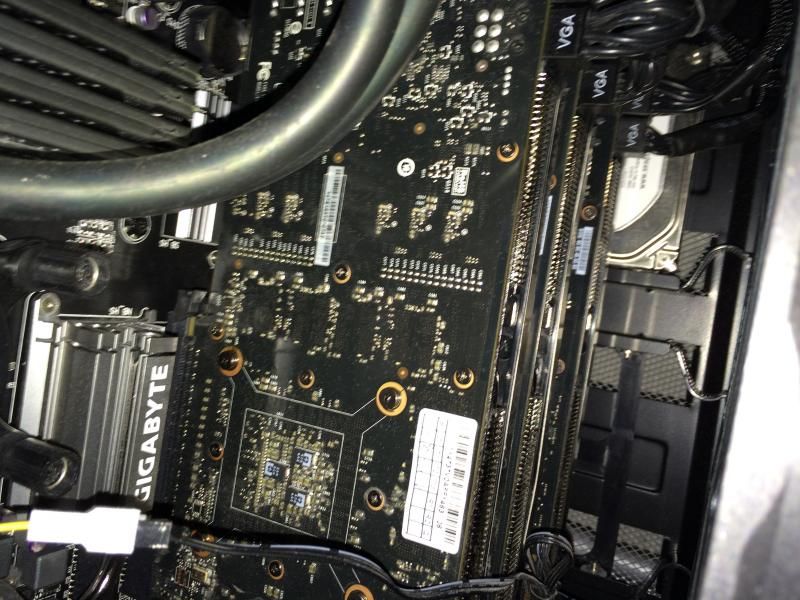

> I made a mistake with the motherboard, should've gone with a gaming one instead of overclocking. It only supports 2xSLI, and those 2 slots are right next to each others. The 3rd slot can't be used as it is x4 and SLI requires a minimum of x8.

Hi, I live up here in the north (near the Arctic Circle). Heat is a problem here too (at least in my computer room...). My GTX 780s blew their fans out months ago. They're "passively" cooled now. See pictures below:

My Rig (currently running on a spare-part mobo, P9X79-WS, while the P9X79WS-E is in service)

These are not currently used (side panel fans are not powerful enough; one GTX 780 GPU does not fit on the mobo)

This is The Fan...

... blowing less than an inch away from the 3 GPUs (their heatpipes are recognizable)

... And yes, check the 24-pin connector to the MoBo, as someone stated right at the beginning of this thread. Look at the cable pins near the top of your motherboard: mine have melted (plastic burned away, copper 'tubes' visible). Stability was restored by connecting an extra Molex power cable to the MoBo.

P.S. I remember someone asking for pictures of my system. Well, here they are, in a totally obscure and wrong place, but anyway.

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.