more power - less credit


| Author | Message |

|---|---|

|

DoryPaul Joined: 21 Sep 99 Posts: 76 Credit: 213,697 RAC: 0

|

Has anyone actually noticed this? I was looking through the results of my PCs and work units and found that the more powerful computers (in most cases) get awarded less credit than those that are a little slower. My P4 2.4 GHz scores lower on many occasions than my P3 800 MHz computer. They have not shared a result as far as I can tell, and I know that the credit scored per WU is different every time, but can it be only coincidence that slower PCs score higher? Just my thoughts... |

Scribe Joined: 4 Nov 00 Posts: 137 Credit: 35,235 RAC: 0

|

Slower PCs take longer: more CPU time, more points. Faster ones get fewer points per WU, but do more WUs to compensate! |

|

DoryPaul Joined: 21 Sep 99 Posts: 76 Credit: 213,697 RAC: 0

|

Well, I have 3 comps at home, all clones. All they do all day is crunch WUs. I have an AMD Athlon XP 2200 (1.6 GHz), a P4 1.6 GHz, and a P3 800 MHz. All comps take around 8 hours per WU. They all run messenger, security software, and anti-virus, but one also has the ability to receive remote control commands over the internet... Overall it seems the P3 800 does more work than the rest... I was thinking of upgrading it, but it's doing fine just as it is ;P |

slavko.sk slavko.sk Send message Joined: 27 Jun 00 Posts: 346 Credit: 417,028 RAC: 0

|

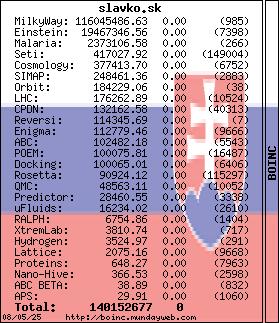

I already wrote about this. My P4 2.66GHz does 10 WU per day, each for 30 credits, which is 300. My PIII 800MHz double proc's does 4,5 WU per day each for 60-70 credits ... 290 credits per day anyway. I'm still thinking, it has not effect to run on faster computer. Do it on more computers does the effect, but not at faster. ALL GLORY TO THE HYPNOTOAD! Potrebujete pomoc? My Stats

|

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

Taking a quick look, it seems that with seti-v4.05 my slowest machine claims roughly 40 CS/result, the middle one 45 CS/result, and the fastest 75 CS/result (CS = cobblestones). Looking at the very limited info on granted credit with seti-v4.05, it looks like the 2 slowest normally get more than they claimed, while the fastest gets less than it claimed. So, at least from the available info, the granted credit for 100 results will be roughly the same regardless of which machine crunched them. This means there's no big difference between having 2 slow computers crunching 5 wu/day and one fast computer crunching 10 wu/day. |

|

STE\/E Joined: 29 Mar 03 Posts: 1137 Credit: 5,334,063 RAC: 0

|

> This means there's no big difference between having 2 slow computers crunching 5 wu/day and one fast computer crunching 10 wu/day.

I beg to differ with that opinion... I will use CPDN & LHC as an example...

1. My P3 850 will turn in a CPDN trickle every 24 hrs for 75.6 credits...
2. My P4 3.4 will turn in 2 CPDN trickles every 7 hrs 15 min, for roughly 6+ trickles per day = about 500 credits per day...
3. My P3 850 takes 1 hr 45 min to crunch a normal LHC WU, for roughly 14 WUs a day @ about 9 credits per WU = about 126 credits per day...
4. My P4 3.4 will crunch 2 normal LHC WUs every 40 min, for about 72 WUs per day @ about 5-6 credits per WU = 360-432 credits per day

Don't tell me 2 slower PCs will keep up with 1 faster PC; by my calculations I would need 5 or 6 P3 850s to keep up with my lone P4 3.4 ... :) |
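The per-day figures in the post above all follow one pattern: work units finished per day times credit per work unit. A minimal C++ sketch of that arithmetic is below. The function name `credits_per_day` and its parameters are made up for illustration, and it assumes the host crunches around the clock.

```cpp
#include <cassert>

// Credits earned per day by one host, assuming it crunches 24 hours a day.
// minutes_per_batch: wall-clock minutes needed to finish one batch of work units
// units_per_batch:   work units finished together in that time (e.g. 2 when two run side by side)
// credit_per_unit:   credit granted for each finished work unit
double credits_per_day(double minutes_per_batch, int units_per_batch, double credit_per_unit) {
    assert(minutes_per_batch > 0.0 && units_per_batch > 0);
    const double minutes_per_day = 24.0 * 60.0;
    const double units_per_day = minutes_per_day / minutes_per_batch * units_per_batch;
    return units_per_day * credit_per_unit;
}
```

With the LHC numbers from the post, `credits_per_day(105.0, 1, 9.0)` gives roughly 123 for the P3 850 (the post rounds to 14 WUs and 126 credits), and `credits_per_day(40.0, 2, 5.0)` gives 360 for the P4 3.4 at the low end of its credit range.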

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

> Don't tell me 2 slower PCs will keep up with 1 faster PC; by my calculations I would need 5 or 6 P3 850s to keep up with my lone P4 3.4 ... :)

I didn't say 2 slow 486sx-25s would compete with an 8-way Opteron 850, but rather that 2 slow computers crunching 5 wu/day will get roughly the same granted credit as 1 computer crunching 10 wu/day. Not specifically said before, but the WUs must also be roughly the same, so you can't compare 10 LHC WUs with 10 CPDN WUs. ;) |

Aardvark Joined: 9 Sep 99 Posts: 44 Credit: 353,365 RAC: 0

|

I think it has to do with the benchmarks. BOINC claims credit (basically) based on your benchmarks multiplied by how long it took to calculate the WU. I don't think that the benchmark system works very well, and that causes this disparity. The calculations used in the benchmarks don't translate perfectly into the calculations actually used in a given WU. Anyway, that's my take on it.

> Has anyone actually noticed...
>
> I was looking through the results of my PCs and work units and found that the more powerful computers (in most cases) get awarded less credit than those that are a little slower.
>
> My P4 2.4 GHz scores lower on many occasions than my P3 800 MHz computer.
>
> They have not shared a result as far as I can tell, and I know that the credit scored per WU is different every time, but can it be only coincidence that slower PCs score higher?
>
> Just my thoughts...

-Aardvark |

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

> ... 2 slow computers crunching 5 wu/day will get roughly the same granted credit as 1 computer crunching 10 wu/day.

Yes, 10 WUs per day of approximately the same complexity, from whatever source, should be granted the same credit.

5 x Celeron P2 500, doing 11:00 per WU = 10/day

1 x P4 3400 (non-HT), doing 2:20 per WU = 10/day

Same credit for your account, 1/5 of the credit for each individual P2 Celeron machine.

> I don't think that the benchmark system works very well and thus causes this disparity.

Quite correct. The 'Whetstone' floating-point benchmark does 8 separate timing tests. While it is a standard benchmark of system speed, it does not correlate with how the SETI math uses the machine. Also, the guesstimate of how many floating-point ops will be performed by each WU is a flat number (27.9 MFLOPS), not pre-calculated by the servers. |

|

Pepo Joined: 5 Aug 99 Posts: 308 Credit: 418,019 RAC: 0

|

> > I don't think that the benchmark system works very well and thus causes this disparity.
>
> Quite correct.
>
> The 'Whetstone' floating-point benchmark does 8 separate timing tests. While it is a standard benchmark of system speed, it does not correlate with how the SETI math uses the machine. Also, the guesstimate of how many floating-point ops will be performed by each WU is a flat number (27.9 MFLOPS), not pre-calculated by the servers.

As was already mentioned in the "CPU time calculations, hyperthreading" thread (http://setiweb.ssl.berkeley.edu/sah/forum_thread.php?id=4858#31070), the solution would be to measure e.g. the counts of the major FFT loops or so.

[edit] This could then measure the math / science crunched, not the time spent.

Peter |

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

> As was already mentioned in the "CPU time calculations, hyperthreading" thread (http://setiweb.ssl.berkeley.edu/sah/forum_thread.php?id=4858#31070), the solution would be to measure e.g. the counts of the major FFT loops or so.
>
> [edit] This could then measure the math / science crunched, not the time spent.
>
> Peter

Thanks Peter,

I'm aware of this... as I have modified the seti code to do this very thing ;) Just trying to get them to use it. |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

> Thanks Peter,
>
> I'm aware of this... as I have modified the seti code to do this very thing ;)
>
> Just trying to get them to use it.

The problem is that the measurement yardstick has to be agnostic. SETI@Home uses FFTs a lot; not all science applications will... so you still have to have a conversion of loops to Cobblestones, and a Cobblestone calculator for the other science applications...

So, the problem remains. How do you benchmark and yet stay agnostic? This is not a simple problem... if you read up on benchmarking you find that this is almost as elusive as the Holy Grail...

I do have a lecture, partially converted to HTML, that goes into this topic some... But I have probably 50 articles stretching back to the '70s that speak to this issue, and we usually sum it up as lies, dratted lies, and benchmarks...

The only "perfect" benchmark that is usable is the workload to be measured. Unfortunately for us, we have a "spread" of workloads and processes, and yet we are trying to establish a measurement system that does not rely on one specific workload that may be atypical of the work in the various science applications.

Try reading the lecture, and if you have more questions, I can try to answer them... |

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

> So, the problem remains. How do you benchmark and yet stay agnostic? This is not a simple problem... if you read up on benchmarking you find that this is almost as elusive as the Holy Grail...

Paul,

My method is: calculate # secs for a given WU (on a given machine). Retrieve the # FPops estimate given with the WU (part of the WU file from the server).

Machine's true seti_only_benchmark = #fpops / #secs.

To give credit, simply do the reverse:

Credit seconds submitted to server = #secs * (seti_only_benchmark / currentFPbenchmark)

The code is set up to generate separate benchmarks on a per-project basis (values stored in the project class structure). |
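The two formulas in the post above can be sketched in C++ as follows. This is only an illustration of the arithmetic as written in the post, not Benher's actual modification; the function and parameter names are made up here.

```cpp
#include <cassert>

// Effective speed of this host on one project, in floating-point ops per second.
// est_fpops: the server's operation-count estimate shipped with the work unit
// cpu_secs:  measured CPU time for that work unit on this host
double project_benchmark(double est_fpops, double cpu_secs) {
    assert(cpu_secs > 0.0);
    return est_fpops / cpu_secs;
}

// CPU seconds to report, rescaled by the ratio of the per-project benchmark
// to the generic floating-point (Whetstone) benchmark, both in ops per second.
double credited_seconds(double cpu_secs, double project_bench, double whetstone_fpops) {
    assert(whetstone_fpops > 0.0);
    return cpu_secs * (project_bench / whetstone_fpops);
}
```

One thing the sketch makes visible: if the per-project benchmark is taken from the same work unit, the measured time cancels, and the credited seconds equal `est_fpops / whetstone_fpops`, which is the server's own run-time prediction for that host.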

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Benher,

> My method is: calculate # secs for a given WU (on a given machine). Retrieve the # FPops estimate given with the WU (part of the WU file from the server).
>
> Machine's true seti_only_benchmark = #fpops / #secs.
>
> To give credit, simply do the reverse:
>
> Credit seconds submitted to server = #secs * (seti_only_benchmark / currentFPbenchmark)
>
> The code is set up to generate separate benchmarks on a per-project basis (values stored in the project class structure).

Ok, I guess I am still dense... I still don't see it... :( Can you lead me by the hand?

1) Project calculates Ops, a static "magic" number based on the WU
2) WU and static number are downloaded to the participant's machine
3) Result generated; compute time is multiplied by the "magic" number, giving Cobblestones

That is my understanding of what you have proposed... If that is not right, I need it recast. I also don't see how two different projects would calculate the "magic" number, say SETI@Home and cp.net...

One other "fly" in the ointment is that SETI@Home WUs are not consistent in processing time, due to angle rate issues and noise. So I am still at a loss as to why those would not color the credit granted.

All I can see is that the current "magic" number, which is the Whetstones & Dhrystones, is replaced by a different one. There is no inherent "goodness" in a different number; it just replaces one with another, and I fail to see how that is going to make it all better...

Anyway, I still don't get it... |

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

> Ok, I guess I am still dense... I still don't see it... :(
>
> Can you lead me by the hand?
>
> 1) Project calculates Ops, a static "magic" number based on the WU
> 2) WU and static number are downloaded to the participant's machine
> 3) Result generated; compute time is multiplied by the "magic" number, giving Cobblestones

Paul,

The goal is to figure out how fast a particular machine is (at seti) in order to give it proper credit.

So, I calculate how long the servers think the machine should take for a given WU (the number you see in the estimated time to completion column), and I divide that by the time it actually took (almost always a lower number). This then provides a multiplier to apply to the credit the server expects, giving the credit the machine should get.

In short: (time_predicted / actual_time) * original_server_credit.

Table of examples:

| Mfg. | Model | O/S | Actual | Pred | Whet | Dhry | Ratio (Pred/Actual) |
|---|---|---|---|---|---|---|---|
| AMD | Athlon XP 3200+ | Win 98se | 2:40 | 4:11 | 2064 | 4757 | 1.569 |
| AMD | Duron 800 | server 2K | 8:14 | 10:22 | 748 | 1729 | 1.259 |
| AMD | Duron 1762 | win 2K | 3:45 | 4:43 | 1638 | 3763 | 1.258 |
| AMD | Duron mob 800 | win 2K | 8:52 | 11:10 | 694 | 1687 | 1.259 |
| Intel | P2 Cel 500 | Win 98se | 14:30 | 18:04 | 429 | 1056 | 1.246 |
| Intel | P3 500 | Win 98se | 12:30 | 18:31 | 422 | 1019 | 1.481 |
| Intel | P4 1800 | Win XP hom | 4:56 | 8:26 | 919 | 2400 | 1.709 |

Here is the server source code for calculating "requested credit":

    // somewhat arbitrary formula for credit as a function of CPU time.
    // Could also include terms for RAM size, network speed etc.
    //
    static void compute_credit_rating(HOST& host, SCHEDULER_REQUEST& sreq) {
        double cobblestone_factor = 100;
        host.credit_per_cpu_sec = (fabs(host.p_fpops)/1e9 + fabs(host.p_iops)/1e9) * cobblestone_factor / (2 * SECONDS_PER_DAY);
    }

    ... lots of lines of source...

    srip->claimed_credit = srip->cpu_time * reply.host.credit_per_cpu_sec;

|
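To try the quoted formula on the table rows, here is a self-contained C++ sketch. It mirrors the quoted arithmetic but is not the BOINC source: the free functions, the `rescaled_credit` helper for Benher's multiplier, and the unit assumptions (Whet and Dhry columns in millions of operations per second, Actual and Pred in hours:minutes) are all assumptions made for this example.

```cpp
#include <cassert>
#include <cmath>

constexpr double SECONDS_PER_DAY = 86400.0;

// Credit claimed per CPU second, following the quoted formula: both benchmarks
// (in ops/sec) are scaled to billions, summed, and divided by 2 days of seconds.
double credit_per_cpu_sec(double p_fpops, double p_iops) {
    const double cobblestone_factor = 100.0;
    return (std::fabs(p_fpops) / 1e9 + std::fabs(p_iops) / 1e9)
           * cobblestone_factor / (2.0 * SECONDS_PER_DAY);
}

// Claimed credit for one result: CPU time times the per-second rate.
double claimed_credit(double cpu_time_secs, double p_fpops, double p_iops) {
    assert(cpu_time_secs >= 0.0);
    return cpu_time_secs * credit_per_cpu_sec(p_fpops, p_iops);
}

// Benher's proposed rescaling: (time_predicted / actual_time) * original credit.
double rescaled_credit(double predicted_secs, double actual_secs, double server_credit) {
    assert(actual_secs > 0.0);
    return predicted_secs / actual_secs * server_credit;
}
```

Under those unit assumptions, the Athlon XP 3200+ row (2:40 actual = 9600 s, 2064 and 4757 million ops/sec) claims about 37.9 credits, and the 4:11 prediction (15060 s) would rescale that to about 59.4.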

|

Bart Barenbrug Joined: 7 Jul 04 Posts: 52 Credit: 337,401 RAC: 0

|

fabs(host.p_fpops)/1e9 + fabs(host.p_iops)/1e9 shows that Dhrystones and Whetstones are simply averaged. So basically half of the time is treated as floating point work, and the other half as integer work. For example, if your computer actually spent say 80% of its time on floating point computations and only 20% on integer operations, 30% of your floating point work would be credited according to your integer benchmark results. So this will mostly affect machines which have quite different scores for floating point and integer. It also depends on how the work for a work unit is distributed over floating point and integer work, and this is where different BOINC projects may differ.

So a solution would be to assign a ratio to each project indicating how many of the operations spent on a work unit are floating point operations versus integer operations. If, for example, your average seti WU would use (and this is hypothetical) mostly floating point FFTs (let's say 80% floating point and 20% integer work), but CPDN would use mostly integer (let's say 70% integer and 30% floating point), you would have a project setting that would be 0.8 for seti and 0.3 for CPDN (this would be the "magic number" suggested earlier). Taking this weight into account (how, see below) still doesn't account for variations in the composition of a work unit's computation (in terms of floating point and integer work) within a project (due to angle rate issues etc.), but at least it could provide a way to weigh the work more precisely in the aspect of floating point versus integer, without being hampered by differences between projects (which are now accounted for in the ratio). As credits are computed currently, there could just as well be one benchmark value (the average of the floating point and integer measurements): the distinction between them is not really used at the moment. Let's call the parameter project.f_weight.

At first glance, the formula above might then become something like:

(project.f_weight * fabs(host.p_fpops) + (1.0-project.f_weight) * fabs(host.p_iops))/2e9

where project.f_weight is a number between 0 and 1 (leaving 1.0-project.f_weight as the integer weight). But this doesn't work, since the slower a computer is at, for example, floating point, the more of its time is actually spent in floating point computations.

Looking at this in a little more detail: if a work unit needs a certain number of operations, say w, and a certain part p of those are floating point operations (p between 0 and 1; this is the project setting project.f_weight), then we know that p*w operations are floating point operations and (1-p)*w are integer operations. p can be determined by profiling the computation of a number of work units for a given project and seeing how many operations are floating point and how many are integer. We also have our benchmark results: f (=host.p_fpops, telling us how many floating point operations the client can perform per second) and i (=host.p_iops, giving the number of integer operations per second). We then know that the time spent on floating point operations is p*w/f and the time spent on integer operations is (1-p)*w/i. So the total time spent on the work unit is w*(p/f+(1-p)/i).

When a result comes back, it is actually the value of w that we want to know, and the total time spent that we do know. At least that's my assumption: that w is a better indicator of the amount of science done than the time spent on the work unit, and this is debatable, I think. So let the computation time that gets reported back for a work unit be t. Then from w*(p/f+(1-p)/i)=t we can derive w=(f*i/(p*i+(1-p)*f))*t. So the factor that converts a given time t into the number of operations w is:

f*i/(p*i+(1-p)*f)

which is what could be used in the formula to compute host.credit_per_cpu_sec instead of just averaging host.p_fpops and host.p_iops.

This does make things a bit more complicated though (especially if you would also want to weigh in network time etc.), and it doesn't account for CPUs possibly being able to perform floating point and integer operations in parallel etc., but at least it would use the two benchmark numbers in a more meaningful way than they currently are. |
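For readers who want the algebra in the post above spelled out, here is the same derivation in LaTeX, using the post's own symbols: $w$ is the total operation count, $p$ the floating point fraction, $f$ and $i$ the floating point and integer benchmark rates in operations per second, and $t$ the reported CPU time. It relies only on the post's assumption that floating point and integer work run strictly one after the other.

```latex
\begin{align*}
t &= \frac{p\,w}{f} + \frac{(1-p)\,w}{i}
   = w\left(\frac{p}{f} + \frac{1-p}{i}\right)
   = w\,\frac{p\,i + (1-p)\,f}{f\,i} \\[4pt]
w &= \frac{f\,i}{p\,i + (1-p)\,f}\; t
\end{align*}
```

As a sanity check, when $f = i$ the factor reduces to $f$ for any $p$, so the weighted rule and plain averaging agree for a machine whose two benchmark scores are equal.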

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Benher & Bart,

I am still paddling madly, but the navigator did say that land was 5,000 nmi away... I kinda know how you are deriving the numbers (though Bart lost me someplace), but I am still in the dark as to how your ratio solves any problems.

I know we have "proved" several times that there are problems with both the benchmarks and the way that they are used. And I use the word "proved" in the sense that even if we could not make a perfect analysis, the anecdotal data was pretty conclusive. Just like we now have a case for a dramatic increase in processing time for SETI@Home WUs over the last couple of releases.

At any rate, for me, the next step is that I would like to understand what problem we are solving and how your system solves that problem. I am assuming at the moment that you are trying to solve the scaling issue between fast computers and slow computers, though I may have that wrong. If that is correct, can you use the results posted to show how that would work? |

|

Bart Barenbrug Joined: 7 Jul 04 Posts: 52 Credit: 337,401 RAC: 0

|

Let me first try to rephrase what I understand from Benher's post (to see if I got it), and then get to my own post (which addresses a slightly different, though related, issue). This is my understanding of Benher's post:

1) use the current way of predicting how long a work unit will compute
2) do the actual computation and measure the time
3) divide the two to derive a scale factor which you can apply to
   a) improve the next predictions, to be able to better predict how long a WU will take
   b) use also as a scale factor for the claimed credit

Imho 3b doesn't solve much (but correct me if I'm wrong), since the multiplier only says something about the accuracy of the prediction, so using the multiplier for claimed credit only brings this inaccuracy into the claimed credit instead of relying solely on the actual time measured. It would only help if we knew that the prediction was a more accurate way of measuring the work done than the actual time spent, but I doubt that that is the case. It would be nice, though, to use the multiplier to improve the prediction.

Further explanation of my post: I'm actually addressing a slightly different issue, namely that there now exist benchmark numbers for floating point computation and integer computation, but these aren't taken into account in a meaningful way. The new formula I propose for weighing the two benchmark numbers (dependent on the amount of floating point versus integer computation done for a typical work unit in a given project) improves the credit system on this point alone. But this may improve the balance between credits given for the same work unit processed on different systems (which can now be quite far apart).

Let me illustrate with an example. Suppose there is a BOINC project where a work unit needs 80 floating point operations and 20 integer operations. For this project the value of "p" in my formula would therefore be 0.8. Suppose also that I have a client that can do 1 floating point operation per second and 5 integer operations per second (these two are the benchmark numbers now used, host.p_fpops and host.p_iops, also denoted f and i respectively in my formulae). That would mean that my computer would take 80 seconds to do the floating point work (80/1) and 4 seconds to process the integer operations (20/5). So the total time reported would be 84 seconds.

The current way of determining claimed credit just averages the two benchmark scores and applies that to the time spent (with a few constants applied too). So the benchmark average is 3 (average of 5 and 1), which multiplied by the time spent on the work unit results in 252 (=3*84) operations' worth of credit. The actual work for the example was only 100 operations (80+20), and another client may come up with a different claimed credit altogether: suppose client 2 can do 4 floating point operations per second and 2 integer operations per second. Total time spent for this client will be 80/4 + 20/2 = 30 seconds, and this is what will be used to compute claimed credit. According to the current way of working, this time spent will be multiplied by the average of the two benchmarks (3 again), now resulting in a claimed credit of 90 (=3*30) operations' worth of credit. Quite a big difference compared to the 252 claimed by client 1. So machines having different ratios of floating point versus integer speed produce claimed credit that can be quite different for the same work unit.

What my formula does is take all this into account. For the example above, the project's "p" would be 0.8. For client 1 we have f=1, i=5 and t=84, whereas for client 2 we have f=4, i=2 and t=30. Putting that in my formula w=(f*i/(p*i+(1-p)*f))*t yields the original 100 operations for that work unit for both clients, so they claim the same amount of credit. Or to put it differently, the factor f*i/(p*i+(1-p)*f) results in 1.19 operations' worth of credit per second for client 1 (which multiplied by the 84 seconds yields the 100 operations), and 3.33 operations' worth of credit per second for client 2. This factor is so much higher for client 2 because it can process floating point so much faster, and this is important since floating point is the major part of a work unit's work for this project.

Another project may be much more reliant on integer computation. Let's say that a work unit for this second project needs 10 floating point operations and 90 integer operations. My two clients from before would report 10/1+90/5=28 seconds and 10/4+90/2=47.5 seconds of time spent respectively, resulting in claimed credit of 84 (=28*3) and 142.5 (=47.5*3) respectively using the current way of computing credit. So now client 2 claims more credit (whereas for the first project it was client 1). Again, my formula recovers the original 100 operations for both clients, yielding the same amount of credit claimed. Note that for this project the value of p is 0.1, so the two benchmark values get combined differently into the "operations' worth of credit per second" for this project. In this project, client 1 gets 3.57 operations' worth of credit for every second spent, and client 2 only 2.1. That is because client 1 is so much better at integer computations, which is important for this second project.

So while this improved formula would not solve every issue with the credit system, it should reduce the imbalance in claimed credits between clients that have different ratios of floating point to integer processing speed. And it also provides a means to differentiate between the kinds of work (in terms of integer versus floating point) done for different projects. |
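The worked example in the post above can be reproduced with a few lines of C++. This is a sketch of the arithmetic only: the `Host` struct and the function names are invented for this example, and the constant factors of the real credit formula are left out, as they are in the post.

```cpp
#include <cassert>

// A client described by its two benchmark rates, in operations per second.
struct Host {
    double f;  // floating point rate
    double i;  // integer rate
};

// CPU time for a work unit, assuming FP and integer work run back to back.
double cpu_time(const Host& h, double fp_ops, double int_ops) {
    assert(h.f > 0.0 && h.i > 0.0);
    return fp_ops / h.f + int_ops / h.i;
}

// Current rule as described in the post: average the benchmarks, multiply by time.
double claim_averaged(const Host& h, double secs) {
    return (h.f + h.i) / 2.0 * secs;
}

// Proposed rule: weight the benchmarks by the project's FP fraction p.
double claim_weighted(const Host& h, double p, double secs) {
    assert(p >= 0.0 && p <= 1.0);
    return h.f * h.i / (p * h.i + (1.0 - p) * h.f) * secs;
}
```

For the first project (80 FP and 20 integer operations, p = 0.8), client 1 `{1, 5}` takes 84 s and client 2 `{4, 2}` takes 30 s; `claim_averaged` gives 252 and 90, while `claim_weighted` gives 100 for both, matching the figures in the post.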

|

Bart Barenbrug Joined: 7 Jul 04 Posts: 52 Credit: 337,401 RAC: 0

|

Not to make the other post even longer: my new formula is also far from perfect. It only splits up the work done into integer versus floating point computations.

First: there are different integer operations which run at different speeds, so the mix of which integer operations are used, and in which quantities, is also important (you can refine the benchmark to take that into account, but it will be increasingly difficult to determine accurately what the mix for a typical work unit of a project will be). The same goes for floating point.

Second, there are other types of calculation as well. "Control" calculations, for example ("if", "while", "switch" etc.), take time as well, so where do those get lumped in?

Third, this does not take cache behavior, memory speeds, and all such things into account, which also influence computation times.

Fourth, a machine might have a floating point unit sitting next to an integer unit, meaning that if an integer and a floating point operation are close enough together in the program, they may actually be executed in parallel rather than sequentially (which is what adding the floating point time to the integer time in my computations corresponds to; anybody tried to determine a proper benchmark for a VLIW machine yet?? ;) ).

But since we do have two benchmark numbers, and they happen to be for floating point versus integer, at least we have to measure for a work unit how much of its work is floating point and how much is integer, otherwise having two different benchmark numbers isn't really meaningful (just adding them doesn't even correspond to 50% floating point operations and 50% integer: there's an inverse relation there, as I tried to show). And if you have that ratio for a work unit of a given project, I think you arrive at the formula I wrote down in my earlier post, which at least uses the two different benchmark numbers in a more meaningful way. It still gives only an approximation of the amount of work done, but a better one, I think. It corrects not so much for the difference between fast and slow computers (just multiplying seconds spent by operations/sec will do that trick), but for the different relative speeds with which computers perform their integer versus their floating point work.

If we can find a correlation between that ratio for a given computer and how its claimed credit compares to the claimed credit of others, this new way of computing claimed credit might help. For example, this WU has had two similar computers working on it (each claiming 38 or so credits), whereas the other computer not only claimed a different amount of credit, but also has quite a different integer versus floating point processing speed (roughly 2.8 times faster at integer than at floating point, versus a factor of roughly 1.5 for the other two). Maybe it's this difference that causes the difference in claimed credit (but maybe it has another cause altogether: more WUs should be analysed). Also, this work unit (http://setiweb.ssl.berkeley.edu/sah/workunit.php?wuid=2978186) seems to indicate something similar.

Could it be true that computers that have slow floating point benchmark results relative to their integer benchmark results tend to claim lower credit? That would mean that seti's work units mostly perform integer operations, and not so much floating point (so seti would have a "p" value lower than 0.5 in my formula), and that the claimed credit therefore gets dragged down by a slow floating point benchmark, even though low floating point performance doesn't matter much for seti processing. If this is a trend that can really be established on the basis of analysis of many more work units, and maybe with a different relation (but still with a clear correlation) for another project which relies more on floating point computation, going for the formula I mentioned might be worth it (once more pressing issues have been solved, of course).

Paul: you can have a look in your results at the claimed credit for your Mac (which has an integer benchmark score of about three times its floating point score) versus that of your Pentiums (which score less than twice as high for integer as for floating point). If I'm right, your Mac will consistently claim lower credit per unit than your P4s (though again: that might also be related to other differences between the machines). Do you see that trend? |
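One way to explore the question raised in the post above is to compute how far the averaged claim drifts from the true operation count as a function of a machine's integer-to-floating-point speed ratio. The C++ sketch below does that under the same sequential-execution model as the earlier posts; `claim_ratio` is a name invented for this example and is not part of BOINC.

```cpp
#include <cassert>

// Ratio of the averaged-benchmark claim to the true operation count w,
// for a host with benchmark rates f and i (ops/sec) on a project whose
// work is a fraction p floating point. A value of 1.0 means a fair claim.
double claim_ratio(double f, double i, double p) {
    assert(f > 0.0 && i > 0.0 && p >= 0.0 && p <= 1.0);
    // claim = (f + i)/2 * t, with t = w * (p/f + (1 - p)/i); w cancels.
    return (f + i) / 2.0 * (p / f + (1.0 - p) / i);
}
```

In this model, a machine three times faster at integer than at floating point (`f = 1`, `i = 3`) under-claims on a pure-integer workload (`p = 0`, ratio about 0.67) and over-claims on a pure-floating-point one (`p = 1`, ratio 2.0), which is the direction of the inference made in the post.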

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

Bart,

I've done profiling of the running app using Intel VTune. Seti is virtually ALL floating point. There is some integer work for address offset calculations into buffers (indexing), and in a few other places, but it is virtually all FP. In fact, the estimated WU completion time calculation only uses the FP benchmark score.

One of the big time stealers (~20% of WU time) that does almost no real processing is a function that exchanges FP numbers in a buffer (called bit reversal). This has high CPU overhead because most of the values being exchanged are not in the L1 or L2 cache.

Example: on my P4 2.4 GHz machine, an exchange that would require 8 cycles if both values were in cache takes ~520 cycles for two memory reads that are not in cache. And there are millions of these exchanges. |
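For readers unfamiliar with the step described above, here is a generic C++ sketch of a bit-reversal permutation as used in many FFT implementations. It is not taken from the SETI@home source; it only illustrates why the exchanges jump around in memory: element k is swapped with the element whose index is k with its bits reversed, so partners are often far apart in a large buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Reverse the lowest `bits` bits of x (e.g. 3 bits: 001 -> 100).
std::size_t reverse_bits(std::size_t x, unsigned bits) {
    std::size_t r = 0;
    for (unsigned b = 0; b < bits; ++b) {
        r = (r << 1) | (x & 1);
        x >>= 1;
    }
    return r;
}

// Reorder a buffer of 2^bits values into bit-reversed index order, in place.
void bit_reverse_permute(std::vector<float>& data, unsigned bits) {
    const std::size_t n = std::size_t{1} << bits;
    assert(data.size() == n);
    for (std::size_t k = 0; k < n; ++k) {
        const std::size_t j = reverse_bits(k, bits);
        if (k < j) {
            // Swap each pair once; for large n the two slots are usually
            // far apart, so at least one of them tends to miss the cache.
            std::swap(data[k], data[j]);
        }
    }
}
```

The loop does no arithmetic on the values at all, which matches the observation that the time goes into memory access rather than floating point work.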
