amd vs intel


| Author | Message |

|---|---|

|

Brian Silvers Joined: 11 Jun 99 Posts: 1681 Credit: 492,052 RAC: 0

|

Thanks for explaining that MUCH better than I did... LOL!

|

skildude Joined: 4 Oct 00 Posts: 9541 Credit: 50,759,529 RAC: 60

|

Methinks the point has been lost. I don't care if you are optimized on anything. I don't care what speed processor you have. I don't care how the credit is applied. The POINT of this topic is that Intels notoriously get lower average claimed credits than AMD processors. Plainly and simply put, I notice a huge low bias for Intel processors, and a slightly higher claimed credit for Apple. Now you may wish to speculate on the futures market for mangos, but what I am pointing out, and would like explained (by somebody who actually does the work for SETI or isn't blowing smoke up my butt), is why there is such a discrepancy. Certainly, I am not the only one to notice this discrepancy. It's really easy to see, too: go to your results pages and look at a completed result. If there is a result with a significantly higher claimed credit, I would bet that it's an AMD processor. This isn't rocket science, it is statistics. Not one person who has posted gave a valid reason for this. I have read how optimizing..., how credit is applied..., etc. What I haven't seen is why. Here is an example (http://setiweb.ssl.berkeley.edu/workunit.php?wuid=34274464); guess which results were from an Intel vs. an AMD. If you use what I said, it's pretty clear which is which. |

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13746 Credit: 208,696,464 RAC: 304

|

I have to assume that something in the BOINC process is hinky since all processors should report back with generally the same claimed credit. They don't. From the Participate link (and sublinks): A BOINC project gives you credit for the computations your computers perform for it. BOINC's unit of credit, the Cobblestone, is 1/100 day of CPU time on a reference computer that does both 1,000 double-precision MIPS based on the Whetstone benchmark, and 1,000 VAX MIPS based on the Dhrystone benchmark. Because they use Whetstones in the calculations they will bear little resemblance to reality as the Whetstone itself is not an indicator of actual performance. eg. However "hinky" the credits may be, everyone that processes a particular Work Unit gets the same credit for processing that Work Unit. So no one is losing out, no one is getting a big win. So as imperfect as it is, there's nothing worth getting even remotely worked up about. Grant Darwin NT |
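To make the Cobblestone definition quoted above concrete, here is a minimal sketch of how a benchmark-based claim could be computed. The function name `claimed_credit` and the equal weighting of the two benchmarks are assumptions made for illustration; this is not taken from the BOINC source.

```python
SECONDS_PER_DAY = 86_400
REFERENCE_MIPS = 1_000.0           # reference computer: 1,000 Whetstone and 1,000 Dhrystone MIPS
CREDITS_PER_REFERENCE_DAY = 100.0  # 1 Cobblestone = 1/100 day of CPU time on that computer


def claimed_credit(cpu_seconds, whetstone_mips, dhrystone_mips):
    """Hypothetical claim: CPU time scaled by benchmark speed relative to the reference."""
    # Assumption: the two benchmark ratios are weighted equally.
    relative_speed = (whetstone_mips / REFERENCE_MIPS + dhrystone_mips / REFERENCE_MIPS) / 2
    cpu_days = cpu_seconds / SECONDS_PER_DAY
    return cpu_days * CREDITS_PER_REFERENCE_DAY * relative_speed


if __name__ == "__main__":
    # Same work unit, same CPU time, different benchmark scores: the claims differ.
    print(claimed_credit(3 * 3600, 1500, 3000))
    print(claimed_credit(3 * 3600, 1000, 2000))
```

In this sketch the claim depends only on CPU time and benchmark scores, not on the work unit itself, which is why two hosts that finish the same WU can claim different amounts.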

|

Brian Silvers Joined: 11 Jun 99 Posts: 1681 Credit: 492,052 RAC: 0

|

Me thinks the point has been lost. No, I think everyone got your point. The problem here is that, as others have told you, the benchmarking is FLAWED. This means that you're crying foul over something that has already been discussed in the past.

|

Mr.Pernod Joined: 8 Feb 04 Posts: 350 Credit: 1,015,988 RAC: 0

|

skildude, I think you have picked a bad example. The Intel machines in your example are all Hyper-Threaded CPUs, which means they are running 2 WUs at the same time on 1 physical CPU. The high-scoring AMD is a dual-core system with, in effect, 2 physical CPUs, so it is running 1 WU per physical CPU. To see how they even out, check the hosts in the example you mentioned on the Pending Credit Calculator by AndyK. |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

The POINT of this topic is that Intels notoriously get lower average claimed credits than AMD processors. If you look at the overall numbers for the two processors you will likely see that the AMD chips have higher scores. So, higher claims. My Xeons have "x" capability, a similar AMD chip will report a benchmark score, but, because of the way that the benchmark system currently works, the Xeon's scores will be LESS than they should be (up to divide by 2). If you wade through the proposal I wrote up in the Wiki you will see a summarization of most of the flaws in the benchmark system. It also has links out to some other references. There is also a thread discussing that proposal with ADDITIONAL links to resources .... happy reading ... Bottom line, the basis for the credit claims is fundamentally flawed, known to be flawed, and due for an overhaul ... there is some work in the wings ... time will tell if it will be enough ... |

skildude Joined: 4 Oct 00 Posts: 9541 Credit: 50,759,529 RAC: 60

|

I have to agree with the last post. Previous posters presented that their "optimized" processes brought in lower claimed credit yet more credits per day. I assumed in the beginning that each WU had an intrinsic value based on quality and size of a returned result for each WU. Clearly (pun intended), SETI is using vague benchmarks that are not equal to the task of rating WU returns. Truly, as you said, an overhaul of the granting system is needed.

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

I have to agree with the last post. Previous posters presented that their "optimized" processes brought in lower claimed credit yet more credits per day. I assumed in the beginning that each WU had an intrinsic value based on quality and size of a returned result for each WU. Clearly (pun intended), SETI is using vague benchmarks that are not equal to the task of rating WU returns. Truly, as you said, an overhaul of the granting system is needed. The biggest problem I have with Paul's proposal (sorry Paul) is that the only way to get a really solid benchmark is to exactly duplicate the kind of operations that a project does. That means that the benchmarks aren't going to be comparable across projects (SETI credits won't compare with Einstein credits) because a SETI benchmark isn't like an Einstein benchmark. ... and I think if you look at 100 work units, that it averages out anyway: sometimes my results will be matched with "optimistic" machines that claim too much, and sometimes with pessimistic hosts that claim too little. So the benchmark produces a rough number, my claimed credit is roughly what the WU is worth, and after a week's crunching, it's all about the same. I also worry when we start applying correction factors that we might end up accidentally building a servo with positive feedback -- that the credit system will get out of range and tend toward the rails. |
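The "it averages out" argument can be sketched as a toy simulation. Everything here is an assumption for illustration: the function name, the uniform distribution of the granted-to-claimed ratio, and the spread of 30% either way. None of these figures come from the project.

```python
import random


def average_grant_ratio(num_work_units, spread=0.3, seed=0):
    """Mean of granted/claimed ratios over many work units (toy model)."""
    rng = random.Random(seed)
    # Assumption: each ratio is drawn uniformly from [1 - spread, 1 + spread],
    # i.e. over-claims and under-claims are equally likely.
    ratios = [rng.uniform(1 - spread, 1 + spread) for _ in range(num_work_units)]
    return sum(ratios) / len(ratios)


if __name__ == "__main__":
    # The mean drifts toward 1.0 as more work units are included.
    for n in (1, 10, 100, 10_000):
        print(n, round(average_grant_ratio(n), 3))
```

The averaging only works if over- and under-claims are roughly symmetric, which is an assumption of the toy model rather than something the thread establishes.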

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

The biggest problem I have with Paul's proposal (sorry Paul) is that the only way to get a really solid benchmark is to exactly duplicate the kind of operations that a project does. Nope, I think we are in violent agreement. My thesis is that the only way to benchmark is to use the actual applications. Without testing, my guess is that the newer FLOPS counting, assuming reasonable instrumentation, could still reach a 1% load on processing. Also something I am not that wild about. But, I also feel that most projects are going to have roughly equivalent numbers for the simple fact that they are doing roughly equivalent operations (PrimeGrid being a notable exception). |

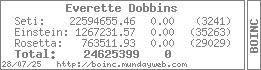

Everette Dobbins Joined: 13 Jan 00 Posts: 291 Credit: 22,594,655 RAC: 0

|

The biggest problem I have with Paul's proposal (sorry Paul) is that the only way to get a really solid benchmark is to exactly duplicate the kind of operations that a project does. Hello Paul, I use optimized clients and have both AMD and Intel, and they both kick butt as far as the number of WUs they complete. Viewing of my computers is enabled, so maybe you can use them to compare.

|

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Heck, and I was just hoping to have 10 ... color me jealous ... |

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

The biggest problem I have with Paul's proposal (sorry Paul) is that the only way to get a really solid benchmark is to exactly duplicate the kind of operations that a project does. That's the other part of my thinking: it's supposed to be "SETI@HOME" not "ACCOUNTING@HOME" -- I wouldn't want to give up 1% to get more accurate credit on an individual work unit. ... and I think that, in the long run, it doesn't matter anyway. If I get more than I claimed sometimes and less than I claimed others, it averages out. |

Tern Joined: 4 Dec 03 Posts: 1122 Credit: 13,376,822 RAC: 44

|

I wouldn't want to give up 1% to get more accurate credit on an individual work unit. This discussion is also taking place at Rosetta, where there is no current "redundant issue of results" - if you look at it from the project's view, that 1% is a) a tiny amount of the CPU power available, and b) evenly applied to everyone, so it's not "you" who would be running 1% slower, it's _everyone_, thus keeping the board even. The advantages to an improved benchmarking system are not limited to the credits alone; it will allow the projects to eliminate the portion of redundancy currently in place only to prevent credit cheating. Imagine SETI being able to issue three, or possibly even two, results per WU instead of four - that would give an immediate 25-50% gain in overall throughput, VERY easily compensating for a 1% increase in time needed for each result. Two is plenty of redundancy for the science, due to the way "strongly similar" is judged. SETI_enhanced is about to make each result take 4-10x as long, as a result of doing more in-depth analysis on the raw data. Even with the number of participants doubling (estimate) due to Classic closing, this means fewer results/day, by 2-5x. Issuing 3 results instead of 4 (because of using flop-count rather than the current benchmark) seems very doable. Even if the 1% estimate is off by a factor of 10, and the cost is 10% (my bet is closer to the 1%), when you can gain 25% from doing it... well, why not? And there's the fact that the whole AMD/Intel, Windows/Linux/Mac, etc. issue becomes moot. A flop is a flop, as opposed to a Whetstone or a Dhrystone. |
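The trade-off above can be checked with a little arithmetic. This is a sketch using the post's own numbers (four results per WU today, three or two proposed, 1% to 10% overhead); the function name is made up for illustration. Note that 25-50% is the reduction in work per WU; counted as WUs completed per unit of CPU time, dropping from four results to three is a gain of 4/3 - 1, about 33%, before overhead.

```python
def throughput_ratio(old_results_per_wu, new_results_per_wu, overhead):
    """Work units validated per unit of total CPU time, new scheme relative to old."""
    # Fewer copies per WU frees CPU time; flop counting makes each copy slower.
    return (old_results_per_wu / new_results_per_wu) / (1.0 + overhead)


if __name__ == "__main__":
    for copies in (3, 2):
        for overhead in (0.01, 0.10):
            gain = throughput_ratio(4, copies, overhead) - 1.0
            print(f"4 -> {copies} results, {overhead:.0%} overhead: {gain:+.1%} work units")
```

Even at the pessimistic 10% overhead, the ratio for three results stays well above 1, which matches the post's conclusion.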

ML1 Joined: 25 Nov 01 Posts: 20331 Credit: 7,508,002 RAC: 20

|

I wouldn't want to give up 1% to get more accurate credit on an individual work unit. Agreed, although the credits system is what seems to drive a lot of people to contribute an awful lot more than just an additional 1% (or whatever) to compensate for whatever credits overhead. I'm in this just for the science. But, hey, if a little "marketing" boosts the contributions then so be it for pandering to "human nature". ...it will allow the projects to eliminate the portion of redundancy currently in place only to prevent credit cheating. Imagine SETI being able to issue three, or possibly even two, results per WU instead of four... I wasn't aware that there is any redundancy in place for credits checks. A minimum of three results is required to give two in agreement to then show up a third incorrect result. Four are sent out to speed up the turn-around time for the proportion of WUs that lose one result. ...flop-count rather than the current benchmark) seems very doable. ... So how do you accurately add up the data-dependent trig flops counts? And what of the development overhead pushed onto every BOINC project for writing and debugging the required accounting code? (Instead of once only in the BOINC infrastructure?) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |
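The reasoning for sending a fourth copy can be sketched with a simple binomial model. The assumptions are loud ones: each issued result is lost independently with the same probability, and the 10% loss rate is hypothetical, since the thread gives no figure. The function name is likewise made up.

```python
from math import comb


def quorum_probability(issued, needed, loss_rate):
    """Chance that at least `needed` of `issued` results come back (binomial model)."""
    p_return = 1.0 - loss_rate
    return sum(
        comb(issued, k) * p_return**k * loss_rate ** (issued - k)
        for k in range(needed, issued + 1)
    )


if __name__ == "__main__":
    loss_rate = 0.10  # hypothetical; not a measured project statistic
    for issued in (3, 4):
        print(issued, round(quorum_probability(issued, 3, loss_rate), 4))
```

Under these assumptions the extra copy raises the chance of getting three results back on the first round, at the cost of more CPU time per WU.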

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

There is a saying: A man with a clock knows what time it is. A man with two clocks is never sure. |

ML1 Joined: 25 Nov 01 Posts: 20331 Credit: 7,508,002 RAC: 20

|

There is a saying: Is that translated from some old Chinese saying? And what of Captain Fitzroy and his menagerie of clocks (in the days before GPS)?... Cheers, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

There is a saying: I'm not sure of the origin, but the other version is: Never go to sea with two chronometers, take one or three. Navigation was at one time a true art, the practice of which was noble and worthy. Now you just pull out a little battery powered box full of electronics and you know exactly where you are, in any weather. |

Tern Joined: 4 Dec 03 Posts: 1122 Credit: 13,376,822 RAC: 44

|

...it will allow the projects to eliminate the portion of redundancy currently in place only to prevent credit cheating. Imagine SETI being able to issue three, or possibly even two, results per WU instead of four... Well, SETI requires two results that are "strongly similar"; I do not know how many times one result is only "weakly similar", so I don't know how many "misses" there are - the project I imagine does. And what the percentage of "lost" results is I also don't know. These are questions they would have to decide, and other projects have different sets of conditions. COULD they issue three instead of four? Just judging from my own results that I've seen, yes - it's rare that the fourth result is actually needed. The flop-counting code is in the science apps, it's up to the projects to do this or not; they can continue to use the benchmarks if they wish. I have no idea what the actual implementation is. The reporting code is in BOINC V5.something and above, no "cost" unless it's called, and I would guess that it takes much less time than running benchmarks does. Some projects may well stay with the current system as being better for them, but for others, the new system may give great benefit. |

ML1 Joined: 25 Nov 01 Posts: 20331 Credit: 7,508,002 RAC: 20

|

...flop-count rather than the current benchmark) seems very doable. ... Yes they could and this was discussed at length at Berkeley. To reduce the number and delay of pending credits, a fourth WU is sent in anticipation that a proportion of WUs are lost. At that time, an additional WU was required more often than not so the overhead of always sending the extra WU was small. Search back through the threads for the numbers. Of course, times and numbers change and the stats today may show we lose fewer WUs. It would be interesting if the stats fanatics could track and chart that. ;-) The flop-counting code is in the science apps, it's up to the projects to do this or not ... and I would guess that it takes much less time than running benchmarks does. You can easily add code to count up the number of discrete FLOPS executed that you have in your code. However, you have little idea of how many flops are used by any library routines called, unless you add them up with a debugger. Worse still for FLOPS-counting accuracy, most math functions use iterative methods that repeat a number of times until the result is accurate enough (error between iterations has reduced to 'small enough'). This iteration process varies on what data is given. So FLOPS accounting is still inaccurate and you've got additional developer effort for all projects. Hence the calibration ideas promoted by Paul Buck. Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |
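The point about iterative routines can be illustrated with a hand-instrumented Newton's method for a square root. This is only a stand-in: real math libraries use their own algorithms, and the function name and the choice to count three operations per iteration are assumptions for the sketch.

```python
def counted_sqrt(a, tolerance=1e-12):
    """Newton's method for sqrt(a), a > 0, returning (result, flops_counted)."""
    x = 1.0  # fixed starting guess
    flops = 0
    while True:
        new_x = 0.5 * (x + a / x)  # one divide, one add, one multiply
        flops += 3                 # the convergence test below is not counted
        if abs(new_x - x) <= tolerance * new_x:
            return new_x, flops
        x = new_x


if __name__ == "__main__":
    # Same routine, different inputs, different operation counts.
    for value in (1.0, 2.0, 1e6):
        root, flops = counted_sqrt(value)
        print(value, root, flops)
```

A counter inside your own code sees these operations; a call into an uninstrumented library routine would not, which is the gap described above.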

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

So FLOPS accounting is still inaccurate and you've got additional developer effort for all projects. Neither CPDN nor Folding@home needs to add any flops-counting, so it's not correct that all projects need to spend time on this. A quick look at BOINC alpha, where the same computer can crunch the exact same WU more than once, reveals more than one HT computer with over 30% variation in CPU time on some of the WUs, and Eric Korpela's Mac has 48.5% variation. For the majority of WUs the variation is of course much smaller, but since re-running the same WU on the same computer can give over 30% variation, how would you expect to calibrate anything? |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.